Cutting-edge IT trends for 2018: Cloud, Big Data, AI, ML and IoT

2018 might well become the year of huge leaps in predictive analytics, AI, edge computing, data storage, hybrid cloud and much more… Learn about the hottest IT trends for 2018!

2017 was the year the IT industry matured and expanded beyond its previous limits, as cloud service providers like AWS introduced the next generation of cloud instances, data storage, and processing solutions, all more powerful and cost-efficient. While some Big Data tools and ML technologies became obsolete and had to be dropped, new, more feature-rich and productive tooling (like Spark) took their place.

The term Big Data itself is somewhat redundant right now, as we know the data in question is huge by default. This is why many IT experts and credible sources now simply say “data” instead. As using data analytics efficiently is crucial for successful business decision-making, a whole group of IT industry branches centers on high-velocity data aggregation, inexpensive data storage, high-speed data processing and high-accuracy data analytics. Let’s take a closer look at the cutting-edge tech trends for businesses in 2018.

IoT for high-velocity data aggregation

Data lakes used for Big Data analytics have multiple inlets, like social media, internal data flows from CRM/ERP systems, accounting platforms, etc. When we add IoT sensors to the mix, however, the complexity grows tenfold. To extract valuable insights from this data, it must be aggregated and processed quickly, and the amount of data ingested should be kept as low as is practical.

For example, when collecting Industry 4.0 data from fully automated factories, there might be hundreds of temperature sensors scattered across the facility, all transmitting the same temperature data during normal operation. In this case, it is logical to cut off 99% of the data and report only that the temperature was nominal. Only when a temperature spike happens should the edge-computing system react, locate the sensor that raised the alert, analyze the situation and act appropriately.
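The filtering idea above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Reading` type, the `summarize` function, the sensor names and the 15–30 °C nominal band are all hypothetical and do not come from any particular edge-computing product.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Reading:
    """One temperature sample from one sensor (hypothetical structure)."""
    sensor_id: str
    celsius: float


def summarize(readings: List[Reading], low: float = 15.0, high: float = 30.0) -> Dict:
    """Collapse a batch of readings into a compact report.

    Readings inside the assumed nominal band [low, high] are only counted;
    readings outside it are forwarded in full so the system can locate
    the sensor that raised the alert.
    """
    alerts = [r for r in readings if not (low <= r.celsius <= high)]
    return {
        "total": len(readings),
        "nominal": len(readings) - len(alerts),
        "alerts": [(r.sensor_id, r.celsius) for r in alerts],
    }


# 199 sensors reporting a nominal temperature and one reporting a spike.
batch = [Reading(f"t{i:03d}", 21.0) for i in range(199)] + [Reading("t199", 74.5)]
report = summarize(batch)
```

Instead of 200 individual readings, the data lake receives one small report: two counters plus the single sensor that needs attention.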

As another example, let’s assume we have a wind power plant with multiple wind turbines running under the control of an edge computing system. If a gust of wind carries small gravel that can damage the rotor bearings, the first turbine hit reports the impact, the system identifies the threat and responds by ordering the rest of the turbines to turn their blades in a direction that avoids a collision.
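The turbine scenario boils down to "one node reports, the controller commands all the others". Here is a minimal sketch of that logic, assuming a hypothetical `Turbine` class with a single `feathered` flag and a hypothetical `handle_impact` function; a real control system would of course involve far more state and safety checks.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Turbine:
    """Hypothetical turbine model; feathered means the blades are turned out of the wind."""
    name: str
    feathered: bool = False


def handle_impact(turbines: List[Turbine], reporter_name: str) -> List[str]:
    """React to an impact report from one turbine.

    Every other turbine that is not already feathered is ordered to
    feather. Returns the names of the turbines that received the order.
    """
    commanded = []
    for turbine in turbines:
        if turbine.name != reporter_name and not turbine.feathered:
            turbine.feathered = True
            commanded.append(turbine.name)
    return commanded


farm = [Turbine("A"), Turbine("B"), Turbine("C")]
ordered = handle_impact(farm, "A")
```

The point of running this at the edge is latency: the decision is made next to the turbines rather than after a round trip to a remote data center.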

Inexpensive data storage in the cloud

Cloud storage is a must for data lakes, as only cloud computing resources can unleash the full potential of business intelligence and Big Data analytics systems. However, when the data in question is huge, so is the cost of storing it. While many cloud service providers like AWS or GCP work hard on minimizing data storage expenses, these still remain substantial. Data security also raises certain concerns, as multiple departments gain access to the cloud and strict security protocols must be applied to keep the data in use safe.

See also: Demystified: 6 Myths of Cloud Computing

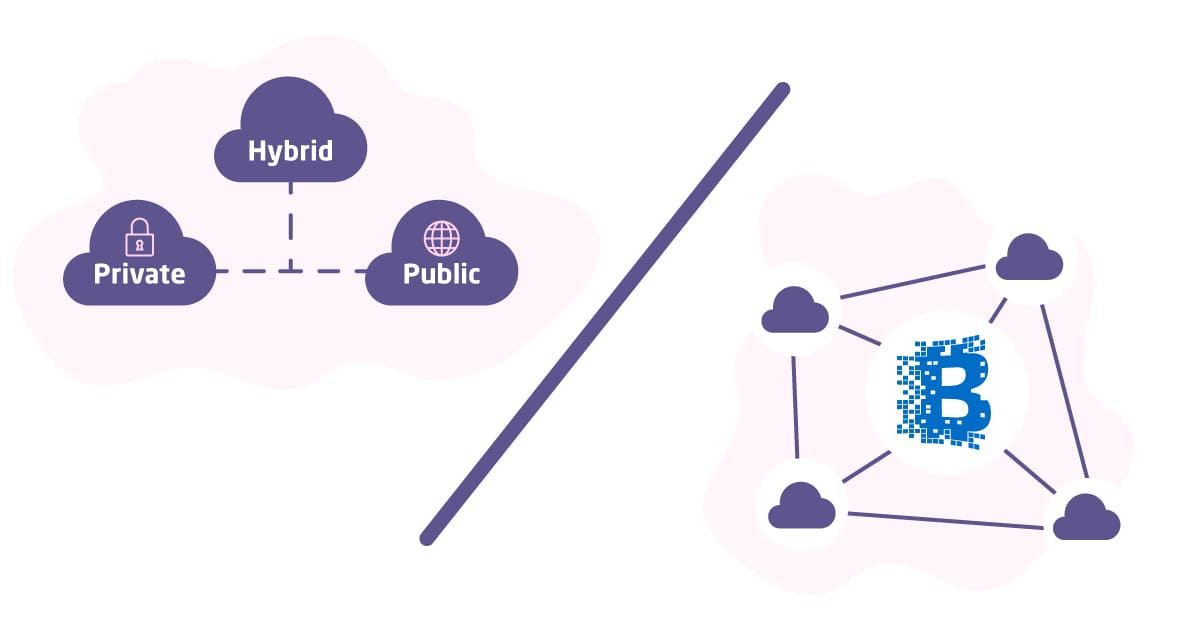

One possible solution is a hybrid cloud strategy, combining the granular access rights provided by on-prem infrastructure with the immense and easily scalable computational power of the public cloud. Another approach is to use blockchain-powered cloud storage options, as some pilots reported a 90% cost reduction compared to AWS.

High-speed Big Data processing

The Big Data solutions provider Syncsort has published a survey of the Big Data challenges and issues faced by enterprise businesses. One of the key findings of that survey is that nearly 70% of respondents mentioned difficulties with ETL, meaning they struggle to process the incoming data fast enough to keep their data lakes fresh and relevant.

Real-time and predictive analytics, which provide solid ground for on-point business decisions, demand polished data processing workflows, and over 75% of the Syncsort survey respondents acknowledged they need to be able to process data more rapidly. Big Data visualization models then help present the analytics results in a clearly comprehensible form.

AI and ML used for high-accuracy data analytics

The main direction AI and Machine Learning (ML) development is taking today is improving the ways humans interact with computers by writing special algorithms. These algorithms make it possible to automate routine work or improve the results of tasks where the outcomes traditionally depend heavily on human skills. 2017 saw great accomplishments in ML areas like text translation, optical image recognition, and various other projects.

See also: Deep Learning summary for 2017: Reinforced Learning and Miscellaneous apps

Amazon’s prediction engine is intended to provide better service to the customers, yet as of now, its accuracy is quite low, around 10% at best. At the end of 2017, AWS joined forces with Microsoft to develop a new-generation AI interface, the Gluon API. By open-sourcing it, AWS and Microsoft hope to encourage AI developers of any skill level to produce cleaner and more efficient AI algorithms.

Final thoughts on the cutting-edge IT trends for 2018

2018 will definitely be the year multiple cloud, Big Data, IoT and AI/ML projects enter the production-grade phase. Tools like Spark, Jupyter, Gluon and others will find their way to the hearts and toolkits of enterprise specialists. People responsible for digital transformation and for increasing the efficiency of Business Intelligence & Big Data analytics in their companies must keep a close eye on these trends and adopt the solutions once they are ready.

What’s your opinion? Did we miss any astonishing project in these areas? What is your first-hand experience with data lake management and data analytics? Please share your thoughts and opinions in the comments below!