How to create a self-healing IT infrastructure

Automating routine tasks paves the way to truly self-managed environments, where the system itself handles the configuration. Today we discuss how to create a self-healing IT infrastructure.

There is always some transition from here to there, some evolutionary process. For example, the next big thing in the automotive industry is the worldwide acceptance of self-driving cars. Despite some fatal accidents involving the Autopilot of Elon Musk's Tesla, Ford plans to produce self-driving cars and Daimler-Benz is already testing self-driving trucks. These manufacturers follow a five-step plan to achieve driverless cars.

The hard part of the transition is the shift in attitude: drivers must accept the role of passengers rather than masters of the road. The benefits are substantial, though: a fully automated delivery system working 24/7, with fewer car crashes and human casualties on the roads. The road to this utopia might seem long, yet the automotive giants are covering it in seven-league strides.

5 steps to a self-healing IT infrastructure

What about the IT industry, though? The obvious direction of its evolution is automation. As more and more routine tasks are automated, work hours and resources can be allocated to improving the infrastructure and increasing its performance, rather than to manually solving numerous tedious tasks. A self-healing IT infrastructure capable of performing routine tasks automatically would greatly simplify DevOps workflows. The problem is that there is no industry-defined roadmap for reaching this state of software delivery. Today we explain our vision of how to create a self-healing IT infrastructure.

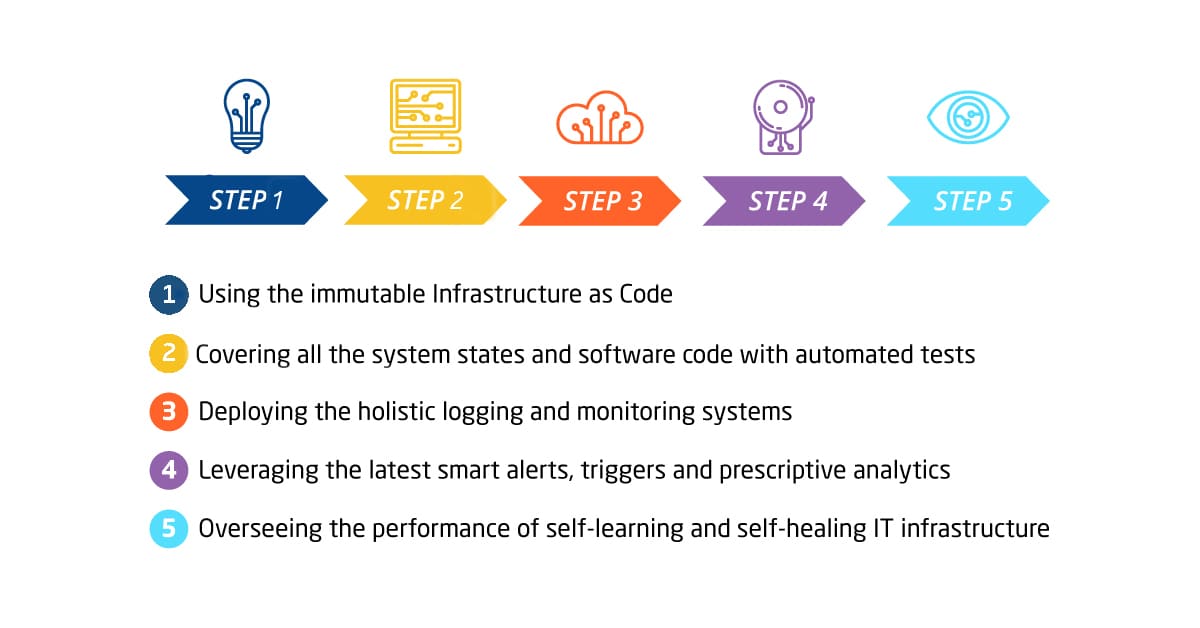

This is a short roadmap of a long process that will most likely take around 5-10 years:

- Using immutable Infrastructure as Code (IaC)

- Covering all the system states and software code with automated tests

- Deploying holistic logging and monitoring systems

- Leveraging the latest smart alerts, triggers, and prescriptive analytics

- Overseeing the performance of a self-learning and self-healing IT infrastructure

Below we cover these steps in more detail.

Immutable IaC as the basis of a self-healing IT infrastructure
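
As an illustration only (not the authors' implementation), the core idea of immutable IaC can be sketched in Python: each server is described by a read-only spec, and any change to that spec produces a new fingerprint, which schedules the server for replacement instead of an in-place patch. The `ServerSpec` fields, the `plan` function, and the fingerprinting scheme are hypothetical; real IaC tools have their own, far richer planning logic.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class ServerSpec:
    """Read-only description of one server (hypothetical fields)."""
    name: str
    image: str          # e.g. a versioned machine image tag
    instance_type: str

    def fingerprint(self) -> str:
        # Stable hash of the whole spec: any field change yields a new value.
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode()).hexdigest()[:12]


def plan(desired: list[ServerSpec], running: dict[str, str]) -> dict[str, list[str]]:
    """Compare desired specs with running servers (name -> fingerprint).

    Immutable rule: a server whose spec changed is never patched in place;
    it is scheduled for replacement by a freshly built instance.
    """
    actions: dict[str, list[str]] = {"create": [], "replace": [], "destroy": []}
    wanted = {spec.name: spec for spec in desired}
    for name, spec in wanted.items():
        if name not in running:
            actions["create"].append(name)
        elif running[name] != spec.fingerprint():
            actions["replace"].append(name)
    for name in running:
        if name not in wanted:
            actions["destroy"].append(name)
    return actions


if __name__ == "__main__":
    web = ServerSpec("web-1", "app:1.4.0", "small")
    running = {"web-1": web.fingerprint(), "old-1": "deadbeef0000"}
    # A new release is a new spec, not a mutation of the old one.
    web_v2 = replace(web, image="app:1.5.0")
    print(plan([web_v2, ServerSpec("db-1", "pg:16", "large")], running))
    # {'create': ['db-1'], 'replace': ['web-1'], 'destroy': ['old-1']}
```

Because the dataclass is frozen, assigning to `web.image` raises `dataclasses.FrozenInstanceError`; the only way to change a server is to build a new spec with `replace()` and let the plan roll it out.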

Automated testing as the key to keeping the codebase efficient
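
A minimal sketch, assuming a hypothetical `ServiceSpec` format and an assumed policy (at least two replicas and a declared health check per service): the infrastructure definition itself is covered by automated tests, so a bad change fails in CI before it reaches production. The test function uses the plain-`assert` style that pytest collects automatically.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ServiceSpec:
    """Hypothetical service definition taken from the IaC repository."""
    name: str
    replicas: int
    health_check_path: Optional[str] = None


MIN_REPLICAS = 2  # assumed policy: no single points of failure


def find_violations(services: list[ServiceSpec]) -> list[str]:
    """Return human-readable policy violations; an empty list means the spec passes."""
    violations = []
    seen = set()
    for svc in services:
        if svc.name in seen:
            violations.append(f"{svc.name}: duplicate service name")
        seen.add(svc.name)
        if svc.replicas < MIN_REPLICAS:
            violations.append(f"{svc.name}: {svc.replicas} replica(s), need >= {MIN_REPLICAS}")
        if not svc.health_check_path:
            violations.append(f"{svc.name}: no health check defined")
    return violations


def test_production_spec_is_valid():
    # In CI this would load the real spec; here it is inlined for illustration.
    spec = [
        ServiceSpec("api", replicas=3, health_check_path="/healthz"),
        ServiceSpec("worker", replicas=2, health_check_path="/ready"),
    ]
    assert find_violations(spec) == []
```

Returning a list of violations rather than failing on the first one lets a single CI run report every problem in the change at once.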

Logging and monitoring are the keys to self-healing infrastructure
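
Monitoring data can drive automation only when machines can read it. The sketch below, built around a hypothetical `ErrorRateMonitor` class, emits one JSON log line per request through Python's standard `logging` module and keeps a sliding window of recent outcomes from which the current error rate is computed. The window size and log fields are assumptions chosen for illustration.

```python
import json
import logging
from collections import deque

logger = logging.getLogger("monitor")


class ErrorRateMonitor:
    """Tracks the outcome of the last `window` requests for one service."""

    def __init__(self, service: str, window: int = 100):
        self.service = service
        # Oldest outcomes drop off automatically once the window is full.
        self.outcomes = deque(maxlen=window)  # True = success, False = failure

    def record(self, ok: bool, latency_ms: float) -> None:
        self.outcomes.append(ok)
        # One JSON object per event keeps logs machine-parseable for later analysis.
        logger.info(json.dumps({
            "service": self.service,
            "ok": ok,
            "latency_ms": latency_ms,
            "error_rate": round(self.error_rate(), 3),
        }))

    def error_rate(self) -> float:
        if not self.outcomes:
            return 0.0
        return self.outcomes.count(False) / len(self.outcomes)


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(message)s")
    m = ErrorRateMonitor("api", window=4)
    for ok in [True, True, False, True, False]:
        m.record(ok, latency_ms=12.5)
    print(m.error_rate())  # 0.5 (two failures among the last four requests)
```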

Smart alerts, triggers, and prescriptive analytics
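
To illustrate the difference between a naive alert and a smarter trigger, the sketch below fires only when a metric stays above its threshold for several consecutive checks (filtering out one-off spikes), then looks up a prescribed remediation in a runbook. The `AlertRule` class, the `RUNBOOK` entries, and the action names are hypothetical, and a simple lookup table stands in for real prescriptive analytics.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AlertRule:
    """Fires once `threshold` has been exceeded `for_checks` times in a row."""
    name: str
    threshold: float
    for_checks: int
    _streak: int = field(default=0, repr=False)

    def evaluate(self, value: float) -> bool:
        # Any check at or below the threshold resets the streak.
        self._streak = self._streak + 1 if value > self.threshold else 0
        return self._streak >= self.for_checks


# Hypothetical runbook: maps a fired alert to a prescribed remediation.
RUNBOOK = {
    "high_error_rate": "restart_service",
    "disk_almost_full": "rotate_logs",
}


def prescribe(rule: AlertRule, value: float) -> Optional[str]:
    """Return the remediation to trigger, or None if the alert has not fired."""
    if rule.evaluate(value):
        # Alerts without a known fix are routed to a human.
        return RUNBOOK.get(rule.name, "page_on_call")
    return None
```

Once fired, the rule keeps returning its remediation on every check while the condition holds; a production system would also need deduplication and cool-down periods.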

Overseeing the performance of a self-healing IT infrastructure
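
Even a self-healing system needs a human overseer. As a minimal sketch (the `heal` function and its callbacks are hypothetical), automated remediation is attempted a bounded number of times, and if the system is still unhealthy, the problem is escalated to an engineer instead of being retried forever.

```python
from typing import Callable


def heal(
    check: Callable[[], bool],
    remediate: Callable[[int], None],
    escalate: Callable[[str], None],
    max_attempts: int = 3,
) -> bool:
    """Try automated remediation up to `max_attempts` times, then hand over to a human.

    Returns True if the system ended up healthy without human help.
    """
    if check():
        return True
    for attempt in range(1, max_attempts + 1):
        remediate(attempt)  # e.g. restart, roll back, or replace an instance
        if check():
            return True
    escalate(f"still unhealthy after {max_attempts} automated attempts")
    return False
```

Passing the health check, remediation, and escalation as callables keeps the loop testable with fakes and lets each team plug in its own restart scripts or paging tool.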

Final thoughts on the steps to create a self-healing IT infrastructure

Despite this utopian picture, ordinary DevOps teams are unlikely to get their hands on such systems any time soon. The human and financial resources needed to develop a system that implements this approach are far beyond the reach of an average business. Thus, as always, we have to wait and hope that industry giants like AWS or GCP will create platforms similar to AWS Lambda or Kubernetes and open-source them. Only when (and if) that happens will DevOps talents worldwide be able to benefit from a self-healing IT infrastructure.

What do you think of this evolutionary process? At what stage of this path is your company or organization currently? Do you plan to move to the next stage soon? Please share your thoughts and opinions in the comments section below!