Big Data: hot trends and implementation results of 2018

As 2018 draws to a close, data scientists and analysts worldwide are looking back at the trends that dominated the Big Data landscape and at the results of implementing Big Data solutions in business.

This article gives a brief overview of opinions expressed by a number of data experts, analysts, mentors, and engineers. These comments were made nearly a year ago, and it is interesting to see how many of them have come true.

First of all, while there is still much discussion about what Big Data is and how it is best used, many thought leaders insist the term is no longer accurate. We can simply say "data", since it is implied and understood by default that the volumes of data generated and processed by modern digital businesses are huge. Gartner reflected this back in 2015, when it removed Big Data from its Hype Cycle.

However, the vast majority of people are not thought leaders, and they still see Big Data as an umbrella term for business analytics powered by Artificial Intelligence (AI) and Machine Learning (ML). In fairness, this is the predominant way Big Data solutions are applied: companies in oil and gas, telecom, pharmaceuticals, and consumer electronics are actively trying to build IoT, AI, and ML capabilities into their products and services.

I list some of these opinions here and later support them with brief descriptions of 2018 Big Data use cases from various industries. I do not quote the texts verbatim but only highlight the main idea of each message.

Big Data trends of 2018: opinions from the visionaries

Marcus Borba, CEO of Borba Consulting, said that “Big Data is ceasing to be a buzzword and becoming the widely accepted approach to adding IoT, AI algorithms, and ML solutions to business intelligence and analytics processes. Startups are becoming data-driven and adopting a cloud-first mode of operation for their data analytics, finding new ways to drive more value to their businesses and deliver more value to their customers.”

Craig Brown, Ph.D., a mentor, technology speaker, coach, and Big Data expert, said that “in 2017 companies largely reassessed their Big Data initiatives, moving away from Hadoop and concentrating more on Big Data visualization, data management, and hybrid cloud environments for data processing.” He predicted that 2018 would be the year when data streaming and on-the-fly analytics soar, along with more in-depth and productive use of NoSQL.

Meta S. Brown, author of Data Mining for Dummies, expressed her view that “the Panama Papers show how modern data analytics can uncover corruption among the rich and powerful. That said, success with data analytics depends not on magic but on a clearly defined process, diligence, and the willingness of all parties involved to cooperate.”

Vasant Dhar, a professor at NYU and editor-in-chief of the “Big Data” journal, said that “2018 saw a general increase in Big Data adoption in the sciences, healthcare, government, and other industries. Cloud-based predictive analytics helps increase business efficiency, while legal regulations focus on security, data governance, and performance stability.”

Tamara Dull, Director of SAS Best Practices, stated that “2018 will be a busy year in which we continue down the IoT/ML/AI path while trying to understand where it is best to store and process data, both long-term and short-term. Will it be on-prem or hybrid cloud, edge computing or private cloud? Time will tell.”

William Schmarzo, also called “the Dean of Big Data”, is the CTO of Dell EMC Services Big Data. He said that “Big Data analytics will continue to transform from an IT department task into a business doctrine. The results of correct data analytics can generate millions in added value, while their marginal cost of reuse is literally zero. Big Data has huge economic potential and is a valuable asset: one we receive for free, with literally no CAPEX or OPEX, and must learn to use as efficiently as possible. Big Data is the new sun for business.”

Mark Van Rijmenam, founder of Datafloq and an internationally renowned Big Data and blockchain strategist, stated that “2018 will be an exciting year in which AI becomes more intelligent and ICOs become a true arms race. Once AI stops being trained on human data and the last sources of biased opinion are eliminated, businesses will be able to implement prescriptive analytics and reap the benefits of this latest stage of Big Data analytics.”

Matei Zaharia, one of the creators of the Apache Spark project and Chief Technologist at Databricks, said that “throughout 2017 and 2018 we saw steady growth in cloud-based analytics deployments. Multiple cloud vendors keep improving their existing Big Data offerings and expanding their range. The industry is coming to understand that cloud-native data analytics is not about forklifting on-prem data stores into the cloud; rather, leveraging pay-as-you-go (PAYG) billing and serverless computing on top of scalable cloud storage takes data analytics to a whole new level.”

Big Data use cases of 2018

Many of these predictions have proven accurate, and below I list several business use cases that highlight the growing adoption of data-driven business analytics.

Using GPUs to process Big Data with superior speed

GPUs are massively parallel chips with thousands of computing cores, designed for image rendering, vector processing, and the high-speed computations required for gaming. Ami Gal, CEO and co-founder of SQream, proposed using GPUs instead of CPUs for high-speed data processing. If the GPU keeps doing exactly what it was designed for, but the vectors it processes are actually columns in a database with an SQL query on top, this speed can be put to good use in data analytics.
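The core idea can be illustrated with a CPU-only sketch: a column stored as a contiguous vector is split into chunks, each chunk is filtered and aggregated independently (the work a GPU would spread across thousands of cores), and the partial results are then combined. This is a generic map-reduce illustration, not SQream's implementation; the `call_minutes` column, the query, and all function names are hypothetical, and here the "cores" are simulated by a sequential loop.

```python
from typing import List, Sequence, Tuple


def split_into_chunks(column: Sequence[float], n_chunks: int) -> List[Sequence[float]]:
    """Split one column into roughly equal contiguous chunks, one per simulated core."""
    size = max(1, -(-len(column) // n_chunks))  # ceiling division
    return [column[i:i + size] for i in range(0, len(column), size)]


def scan_chunk(chunk: Sequence[float], threshold: float) -> Tuple[float, int]:
    """Per-core work: apply the WHERE filter, then compute a partial SUM and COUNT."""
    selected = [v for v in chunk if v > threshold]
    return sum(selected), len(selected)


def sum_where_greater(column: Sequence[float], threshold: float,
                      n_cores: int = 4) -> Tuple[float, int]:
    """Emulates: SELECT SUM(col), COUNT(*) FROM t WHERE col > threshold.

    Map step: each chunk is scanned independently (on a GPU, by different cores).
    Reduce step: the partial results are added up into the final answer.
    """
    partials = [scan_chunk(c, threshold) for c in split_into_chunks(column, n_cores)]
    total = sum(p[0] for p in partials)
    count = sum(p[1] for p in partials)
    return total, count


if __name__ == "__main__":
    call_minutes = [1.5, 12.0, 3.0, 45.0, 0.5, 7.25, 30.0, 2.0]  # made-up sample column
    print(sum_where_greater(call_minutes, threshold=5.0))  # (94.25, 4)
```

Because no chunk depends on any other, adding cores only changes how many chunks run at once, not the answer; that independence is what makes this kind of filter-and-aggregate query a good fit for GPU hardware.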

The main challenge was finding a database able to run on top of a GPU, so the company had to build its own. The solution proved its efficiency when a telecom operator with more than 40 million active subscribers wanted to speed up its business analytics. The existing massively parallel processing (MPP) data warehouse took 1 to 3 minutes to complete a simple query against a 14 TB database of customer profiles, call records, and other data. SQream DB did the same job in merely 8 seconds while scaling to 40 TB with ease.
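To put the quoted figures in perspective, the speedup is simply the ratio of the old query time to the new one. The only inputs in this small sketch are the numbers from the telecom case above; the helper name is illustrative.

```python
def speedup(baseline_seconds: float, new_seconds: float) -> float:
    """How many times faster the new system is than the baseline."""
    return baseline_seconds / new_seconds


# Figures from the case above: 1 to 3 minutes on the MPP warehouse vs. 8 seconds on SQream DB.
low = speedup(1 * 60, 8)
high = speedup(3 * 60, 8)
print(f"speedup: {low:.1f}x to {high:.1f}x")  # speedup: 7.5x to 22.5x
```

In other words, the same simple query ran roughly 7.5 to 22.5 times faster, before even counting the headroom to grow from 14 TB to 40 TB.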

Big Data promises big revenues for the oil and gas industry

Geological exploration has evolved far beyond simply drilling test wells in an attempt to locate oil or gas fields. Huge volumes of 2D, 3D, and 4D seismic images are processed to build a picture of the oil and gas deposits below and to maximize the chance of finding productive seismic trace signatures: the sweet spots for drilling that could not be identified earlier. As a result, IoT infrastructure, edge computing, ML models, and Big Data analytics solutions, when combined, can minimize the oil and gas industry's expenses and maximize its revenues. They even make it possible to use public weather and geological data to predict oil and gas fields without performing costly exploration.

Another important area of Big Data application in the oil and gas industry is maximizing the cost efficiency of all operations. IoT sensors on wells and pipes, at pumping stations, and scattered across the oil fields help analyze the efficiency of the equipment and maximize ROI. It’s better to replace the drill bit with a sturdier one as soon as it hits a dense granite layer than to pay for repairs and an overhaul of the whole rig if the issue is discovered too late.
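A minimal sketch of how such a sensor feed might trigger a bit-change alert, assuming a hypothetical stream of drilling-torque readings where a sustained rise is treated as a proxy for hitting harder rock. The window size, the torque limit, and the readings are illustrative values, not field-calibrated ones.

```python
from collections import deque
from typing import Iterable, Iterator, Tuple


def bit_change_alerts(torque_readings: Iterable[float], window: int = 5,
                      limit: float = 80.0) -> Iterator[Tuple[int, float]]:
    """Yield (reading_index, rolling_mean) whenever the rolling mean exceeds `limit`.

    A sustained rise in torque is used as a hypothetical signal that the bit has
    entered a harder layer; `window` and `limit` are illustrative assumptions.
    """
    recent = deque(maxlen=window)  # keeps only the last `window` readings
    for i, value in enumerate(torque_readings):
        recent.append(value)
        if len(recent) == window:
            mean = sum(recent) / window
            if mean > limit:
                yield i, mean


if __name__ == "__main__":
    # Made-up readings: steady drilling, then a jump as the bit meets denser rock.
    readings = [60.0, 62.0, 61.0, 63.0, 60.0, 90.0, 95.0, 98.0, 97.0, 96.0]
    for index, mean in bit_change_alerts(readings):
        print(f"reading {index}: rolling mean {mean:.1f}, consider changing the bit")
```

Averaging over a window dampens brief spikes, so the alert reacts to a sustained change rather than to every individual reading; the trade-off is a delay of a few readings before the alert fires.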

Safety of oil processing is the third area of application, as smart cameras and sensors can learn the normal oil processing patterns and alert the operators or stop operations immediately should something go awry. This is a huge leap forward from the post-incident analysis and external alerting systems widely adopted in earlier days.
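One simple, textbook way to let software "learn" a normal pattern is a statistical baseline: compute the mean and standard deviation of readings taken during normal operation, then flag live readings that deviate by more than a few standard deviations (a z-score test). This is a swapped-in illustration, not a description of any vendor's smart-camera or sensor system; the pressure values and the 3-sigma limit are hypothetical.

```python
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def fit_baseline(normal_readings: Sequence[float]) -> Tuple[float, float]:
    """Learn the 'normal pattern' as the mean and standard deviation of historical readings."""
    sigma = stdev(normal_readings)
    if sigma == 0:
        raise ValueError("baseline readings must vary to estimate a spread")
    return mean(normal_readings), sigma


def anomalies(readings: Sequence[float], baseline: Tuple[float, float],
              z_limit: float = 3.0) -> List[int]:
    """Return indices of readings more than `z_limit` standard deviations from normal."""
    mu, sigma = baseline
    return [i for i, x in enumerate(readings) if abs(x - mu) / sigma > z_limit]


if __name__ == "__main__":
    # Made-up line-pressure readings recorded during normal operation.
    normal = [100, 101, 99, 100, 102, 98, 100, 101, 99, 100]
    baseline = fit_baseline(normal)
    live = [100, 101, 115, 99]
    print(anomalies(live, baseline))  # [2]: the 115 reading is flagged
```

In practice the alert would feed an operator dashboard or an automatic shutdown rule; real systems also have to handle drifting baselines and multiple correlated sensors, which this sketch ignores.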

Big Data in pharmaceuticals: a wide range of applications

The pharmaceutical industry sits on troves of data and is actively trying to put them to good use through Big Data predictive analytics. Use cases include personalized prescriptions, choosing the right patients for clinical trials, and prescription volume correlation, as well as auxiliary activities such as logistics, marketing, and sales of new medications. The main complications are the strict need to comply with multiple regulations on patients' personal data and the fact that sales depend heavily on physicians' recommendations.

Here are some ways big pharma companies try to overcome these challenges with Big Data.

- A personalized recommendation engine could leverage a 360° view of each patient, including past conditions, family history, and so on, to issue prescriptions tailored to that patient's specific case.

- The process of selecting patients for clinical trials can be vastly improved over the existing bureaucratic approach. If an AI algorithm can identify the individuals who would benefit most from a drug trial, or include an underrepresented group that has not previously taken part in the research, the value of the final results increases greatly.

- Regression models can be used to test possible interactions between chemical compounds during experimental drug development. What takes decades today could be shortened to years or months, while significantly reducing the potential risk to trial patients.

- People don’t buy drugs unless they are ill, and when they do, they buy what their doctor prescribes. Since disease spread is not linear, it is essential to use predictive analytics to assess how different physicians prescribe medications and to reward brand advocates to further bolster sales. Such analytics helps pick the hidden fruit instead of engaging in draining price wars.

- Ongoing training of the ML models involved is required to keep the predictive analytics as efficient as possible. The cost of an error might be a human life, so continuously improving prediction accuracy is essential for the pharma industry.
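The clinical-trial point can be made concrete with a small sketch: rank candidates by a predicted-benefit score and reserve a minimum number of slots per demographic group, so that an underrepresented group is not squeezed out by pure top-N ranking. The `Candidate` fields, the score (assumed to come from some upstream ML model), the group labels, and the quota rule are all hypothetical; this is an illustration of quota-based selection, not an actual trial-design procedure.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Candidate:
    patient_id: str
    group: str                # hypothetical demographic group label
    predicted_benefit: float  # hypothetical score from an upstream ML model


def select_for_trial(candidates: List[Candidate], size: int,
                     min_per_group: int) -> List[Candidate]:
    """Reserve up to `min_per_group` slots per group, then fill the rest by score."""
    by_group: Dict[str, List[Candidate]] = defaultdict(list)
    for c in candidates:
        by_group[c.group].append(c)
    if len(by_group) * min_per_group > size:
        raise ValueError("group quotas do not fit into the trial size")

    selected: List[Candidate] = []
    # 1) Quota step: the best-scoring members of every group get reserved slots.
    for members in by_group.values():
        members.sort(key=lambda c: c.predicted_benefit, reverse=True)
        selected.extend(members[:min_per_group])

    # 2) Fill step: remaining slots go to the highest scorers not yet chosen.
    chosen = {c.patient_id for c in selected}
    rest = sorted((c for c in candidates if c.patient_id not in chosen),
                  key=lambda c: c.predicted_benefit, reverse=True)
    selected.extend(rest[:size - len(selected)])
    return selected


if __name__ == "__main__":
    pool = [Candidate("A1", "A", 0.9), Candidate("A2", "A", 0.8),
            Candidate("A3", "A", 0.7), Candidate("B1", "B", 0.3)]
    print([c.patient_id for c in select_for_trial(pool, size=3, min_per_group=1)])
    # ['A1', 'B1', 'A2']
```

Plain top-3 ranking would have picked A1, A2, and A3 and left group B out entirely; the quota step trades a little predicted benefit for broader representation.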

By leveraging publicly available data in addition to their internal goldmines of knowledge, pharmaceutical companies can identify previously underserved areas of interest and use them to their full potential.

Blockchain and Big Data: a great match

I described projects combining blockchain and Big Data nearly a year ago. Since then, multiple pilot projects have been launched worldwide in industries such as real estate, energy, insurance, financial services, banking, and healthcare. Virtually every business sector can benefit from immutable, transparent, and consistent data. Such data is also much more valuable, as analysis can detect patterns and insights that were not observable before.
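The "immutable" property usually comes from hash-linking records: each block stores the hash of its predecessor, so changing any historical record invalidates the chain from that point on. Below is a minimal single-node sketch using only Python's standard library; it shows tamper evidence only (no consensus, digital signatures, or distribution, which real blockchains add), and the energy-meter records are made up.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(payload: Dict[str, Any], prev_hash: str) -> str:
    """SHA-256 over the record contents plus the previous block's hash."""
    data = json.dumps({"payload": payload, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


def append(chain: List[Dict[str, Any]], payload: Dict[str, Any]) -> None:
    """Add a record linked to the hash of the current last block."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"payload": payload, "prev": prev, "hash": block_hash(payload, prev)})


def verify(chain: List[Dict[str, Any]]) -> bool:
    """Recompute every hash; an edited record breaks its own hash or a later link."""
    prev = GENESIS
    for block in chain:
        if block["prev"] != prev or block["hash"] != block_hash(block["payload"], prev):
            return False
        prev = block["hash"]
    return True


if __name__ == "__main__":
    ledger: List[Dict[str, Any]] = []
    append(ledger, {"meter": "m-1", "kwh": 12.5})
    append(ledger, {"meter": "m-1", "kwh": 13.1})
    print(verify(ledger))            # True
    ledger[0]["payload"]["kwh"] = 99  # tamper with history
    print(verify(ledger))            # False
```

For analytics, the benefit is that a downstream pipeline can check `verify` before trusting historical records, rather than assuming the data was never altered.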

Notable Big Data projects of 2018

Aside from purely business applications, multiple prominent Big Data projects are in active development or just getting started. I briefly list some of these accomplishments (or revelations) below.

- Nvidia makes its PhysX engine open source under the BSD-3 license to bolster the game development community.

- Nvidia moves from physically rendered images toward AI-generated ones in its game development initiatives.

- AWS introduces AWS Ground Station, a service for collecting data directly from satellites, allowing businesses to capture data from their satellites and process it without leaving their AWS accounts.

- Keras, the deep learning library designed “for humans”, is actively developed by a passionate community. It saw a major update (v2.2.0) in June 2018 and keeps improving.

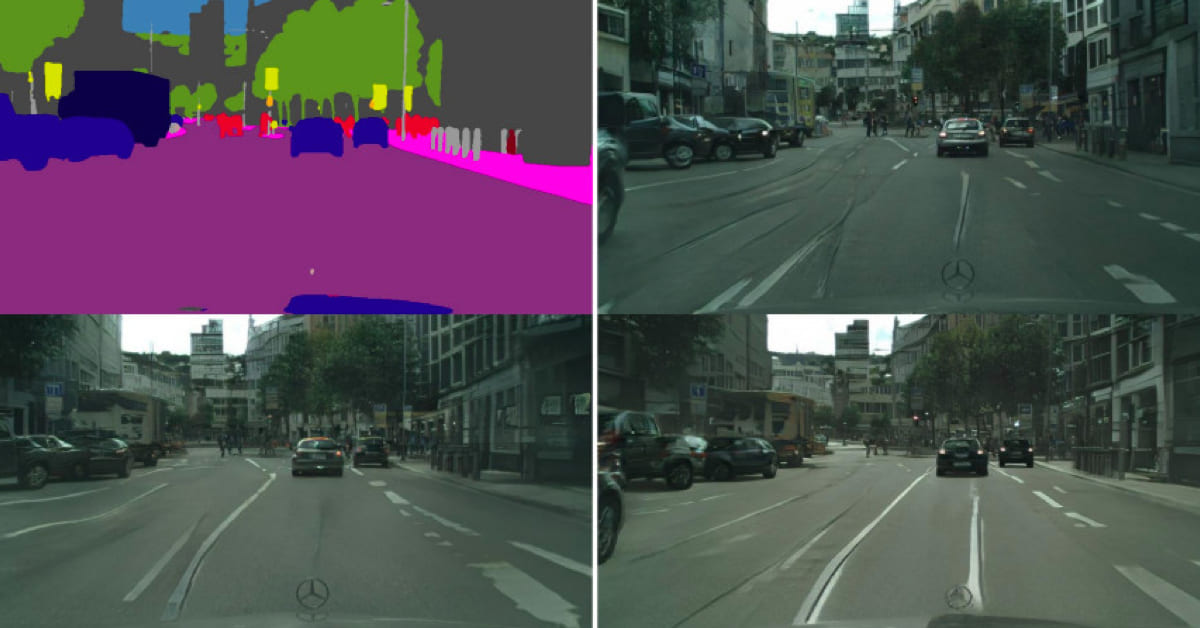

- OpenCV, a popular computer vision library, was updated to v4.0.0, providing a multitude of new features as well as performance improvements.

- Google presents Google Duplex, an innovative service for Google Pixel owners that makes voice calls on the user's behalf to book hotel rooms, restaurant tables, and other amenities. It might not seem important yet, but it is sure to gain much more traction over the next few years.

Final thoughts on Big Data trends, use cases, and projects of 2018

Like any other important industry, Big Data analytics does not stand still. It constantly grows and evolves, offering businesses ever-improving tools and approaches to data analysis and enabling companies of all sizes to leverage the latest tech and best practices to deliver more value to their customers. Hopefully, this material will help you better understand the potential of Big Data for your business.

Did I miss anything important in the field of Big Data that occurred in 2018? Please share your thoughts in the comments below!