2017 in Review: Big Data projects and tools

As new Big Data technologies and products hit the market, more and more exciting opportunities arise. Here is a look at what Big Data had to offer in terms of projects and tools in 2017.

Big Data is the basis for successful analytics, artificial intelligence (AI) and machine learning (ML), and a crucial component of the Internet of Things (IoT). Every day astonishing new projects arise and new tools hit the market, so keeping an eye on all of this can be quite a challenge. Today we discuss the current state of the Big Data industry, the Big Data projects worth taking a closer look at, and the Big Data tools that are gaining traction, as well as those that are slowly fading and leaving the scene.

The state of the Big Data industry in 2017

Nowadays Big Data is no longer available exclusively to giants like AWS, Google, Netflix or Microsoft. Popular cloud service providers have created a market for affordable Big Data analytics. Any business can rent just the computational resources it needs to perform in-depth Big Data mining and turn its data stores into goldmines of useful business knowledge.

See also: AWS vs MS Azure: which cloud provider to choose

We have already described the Big Data success stories of multiple companies in a series of articles on our blog (Part 1, Part 2), debunked the 5 most popular myths about Big Data and highlighted the reasons to use Big Data before your competitors do. We have also explained why becoming data-driven helps startups scale and succeed and how using Big Data helps startups grow.

Big Data Projects of 2017

Various Big Data projects caught our attention this year:

- Healthcare. Data science company Apixio leverages Big Data analytics tools to sift through vast stores of healthcare records, photos and images. Using OCR (Optical Character Recognition), they index disparate unstructured data and turn it into structured records, so that ML models are able to discover patterns and dependencies within that data. These findings are crucial for developing new treatments.

- Agriculture. As more and more technology makes its way into the agricultural sector, vast arrays of data can be gathered and analyzed. The outcomes of such analysis can lead to better crop yields, more efficient fuel and equipment usage and less chemical pollution of the ecosystem (from both fertilizers and pesticides). MyJohnDeere.com is a platform for farmers and agricultural businesses, where they can harness their Big Data to generate even more income and optimize their spending.

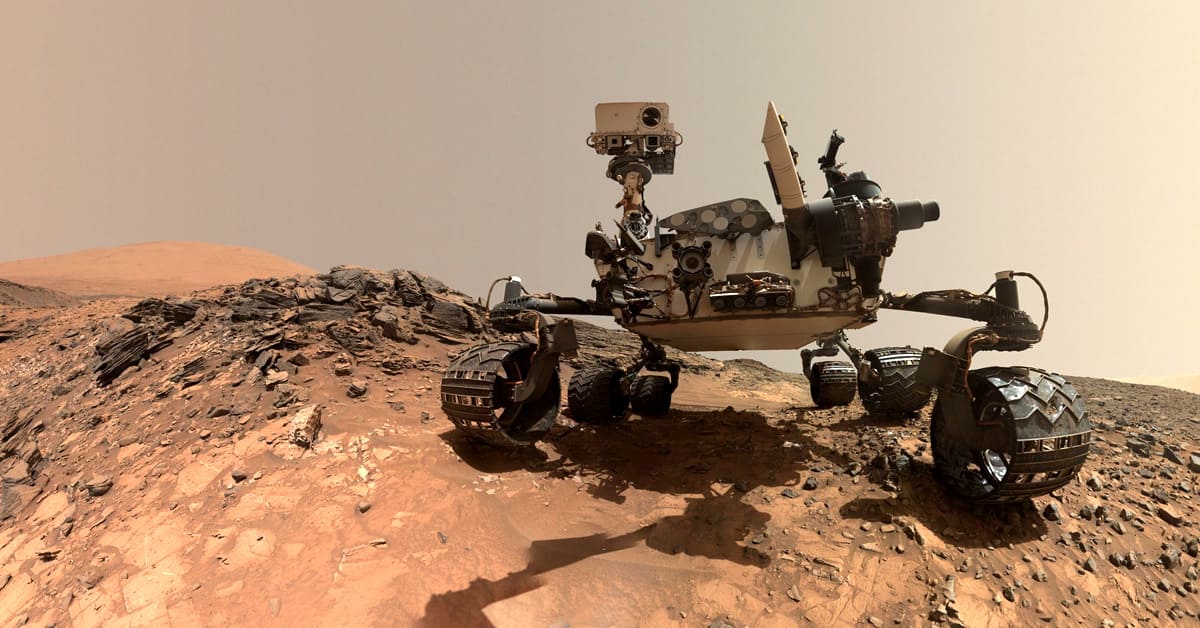

- Mars exploration. The Jet Propulsion Laboratory, the NASA division responsible for operating the Curiosity Rover mission on Mars, employs Elasticsearch technology. This helps them efficiently track and monitor several million data points sent across more than 150,000,000 miles of space, from the Red Planet to our homeworld. Due to the transmission lags, the data analysis must be swift in order to choose the best course of action for the Rover. The next mission, due in 2020 and aimed at discovering the remains of Martian life, has Elasticsearch as its core operational technology, as the lag between any trigger and an appropriate action must be minimal for the mission to succeed.

- Prediction of natural disasters. TerraSeismic, a technology company from Jersey, analyzes satellite-generated Big Data from sensors and other data sources to predict natural disasters like earthquakes and tsunamis, and to help prevent man-made catastrophes like oil or gas line leakages. The same system has proved to be quite efficient at safeguarding and monitoring the flow of refugees from multiple military conflict zones since 2004.

- Fighting organized crime. The police actively use data-driven technologies to fight organized crime. For example, combining the results of the textual analysis software from BasisTech with the CrimeNtel platform from CI Technologies helped track down and arrest the Felony Lane gang, which operated across 35 states and caused tens of millions of dollars of damage. The system is now being adopted by law enforcement authorities across the US to increase their efficiency.

- Comfortable daily activities. Netflix and Amazon help you find the movies or goods you want faster, while flight tickets, hotel rooms and rental cars can be booked more cheaply using services like Kayak. Big Data analytics opens new possibilities for all parties involved, and new horizons emerge daily.

Changes in the Big Data toolset in 2017

As Big Data has been around for quite some time, there are certain Big Data tools (like Hadoop, Storm or Spark) we are already used to. Nevertheless, new solutions keep appearing, and they are more mature than the ones we were using even a year ago. That said, it is high time to shelve some of these tools for good and embrace new tech instead. Here are the Big Data toolset changes you should have made in 2017 (and some to consider in 2018):

- MapReduce. It is a mule that can get the job done, yet with Spark’s DAG capabilities the same job can be done much faster. That said, even if you are quite used to working with MapReduce, perhaps it’s time to give Spark a closer look.

- Storm. Hortonworks still supports Storm, yet with the latency issues and multiple low-level bugs infesting it, the product is in deep stagnation. Storm alternatives like Flink and Apex have much cleaner code and lower latency, and they work better with Spark. Give these tools your attention and you will never want to go back to Storm.

- Pig. Pig once looked set to become a good PL/SQL equivalent for Big Data projects, yet Spark and many other technologies provide the same capabilities without its flaws.

- Java language. Lambdas can work with it, yet only in a most awkward and cumbersome manner. Python provides much better possibilities in terms of functionality and ease of scaling, even if it lags somewhat in performance.

- Flume. The latest release is dated May 20, 2015, and it sure seems to be a bit rusty. The number of commits has declined steadily over the years, so the tool has mostly been left to die. StreamSets and Kafka offer much better functionality and cleaner code, so it’s better to stick to them.
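To make the MapReduce and Python points above a bit more concrete, here is a minimal sketch of a word count written as a map step followed by a reduce step. It uses only the Python standard library and runs on a single machine, so it illustrates the programming model rather than Hadoop or Spark themselves. The sample lines and the function names (`map_phase`, `reduce_phase`, `word_count`) are hypothetical and chosen purely for illustration.

```python
from collections import Counter
from functools import reduce

# Hypothetical sample input; a real job would read from distributed storage.
LINES = [
    "big data tools",
    "big data projects",
    "data",
]


def map_phase(lines):
    """Emit a (word, 1) pair for every word, the way a mapper would."""
    return [(word, 1) for line in lines for word in line.split()]


def reduce_phase(pairs):
    """Sum the counts per word, the way a reducer would."""
    def merge(counts, pair):
        word, n = pair
        counts[word] += n
        return counts

    # Fold all pairs into one Counter, then return a plain dict.
    return dict(reduce(merge, pairs, Counter()))


def word_count(lines):
    """Chain the two phases: map first, then reduce."""
    return reduce_phase(map_phase(lines))


if __name__ == "__main__":
    print(word_count(LINES))
```

In a real cluster the two phases would run on many machines, with the framework shuffling the pairs between them; the sketch only shows how compactly the logic itself can be expressed.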

Final thoughts on the Big Data domain in 2017

Big Data is here at last, and it is here to stay. Businesses and startups can now gain affordable access to Big Data analytics and other functionality through a wide range of services on platforms like AWS, GCP, Azure and the like. Big Data projects disrupt various industries, greatly increasing productivity and reducing expenses, while the Big Data tools mature, leaving outdated software behind and bringing in newer, more functional and more reliable software.

It’s high time to reap the benefits of Big Data analytics for your business or update your existing workflows to match the latest trends and use the most advanced tools. Should you need any help with that, just give us a nudge. We are always ready to help!