10 hot trends of Big Data Analytics for 2017

When we talk about Big Data analytics, we should first understand why this data is so big and why it actually needs to be analyzed.

The main goal of data science (DS), and of Big Data in general, is to find patterns in unstructured data flows in order to simplify the data, establish working templates for further analysis, or uncover anomalies (for example, to detect fraud).

According to Gartner, the data flow is considered truly Big Data when it has three big V’s:

- Volume: the quantity of data flowing into the system within a certain time

- Variety: the number of different data types coming in

- Velocity: the speed at which this data arrives and has to be processed by the system

The volume of data produced worldwide grows exponentially, which leads to the so-called information explosion. The variety of data also grows daily, as dozens of new illnesses, smartphone types, clothing and car models, household goods, etc. appear constantly, each in search of new means of promotion and marketing channels. Don’t forget the hundreds of memes and slang words that appear daily, either. Data velocity is the third thing to keep in mind. Petabytes of data are produced on any given day, and nearly 90% of it will never be read, let alone put to any use.

That said, analytics is essential if a business wants to leverage its Big Data stores and make use of that goldmine of knowledge. People have been trying to analyze this flow of data for quite a long time, but as time goes on, some practices become outdated while other trends become hot.

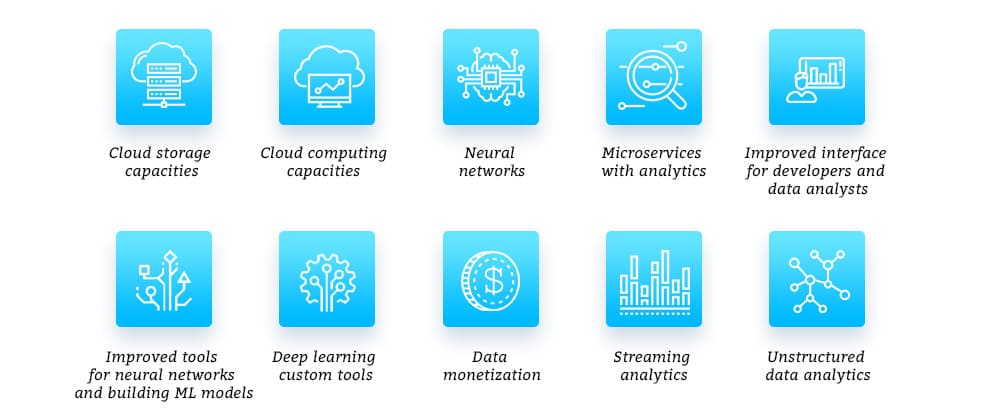

Here are 10 Big Data analytics trends that are really hot in 2017:

- Cloud storage capacities

- Cloud computing capacities

- Neural networks

- Microservices with analytics

- Improved interface for developers and data analysts (R language and Jupyter notebooks)

- Improved tools for neural networks and building ML models, as well as their further training (TensorFlow, MXNet, Microsoft Cognitive Toolkit 2.0, Scikit-learn)

- Deep learning custom tools

- Data monetization

- Streaming analytics

- Unstructured data analytics

Below, we briefly describe why each of these points is important.

Cloud storage capacities

As the data a company operates on becomes really Big, the cost of storing it becomes quite serious. Since building and maintaining a datacenter is not an investment the average company is willing to make, renting these resources from Google, Amazon or Microsoft Azure is the obvious solution. Using these services helps meet the volume requirements of Big Data.

Cloud computing capacities

Once you have sufficient capacity for storing the data, you need enough computational power to process it quickly enough, that is, with enough velocity, to make the data really worthwhile. As of now, Amazon and Google provide a nice host of services (Google Cloud, Google APIs, etc.) that help build efficient cloud computing infrastructure, which any business can use to process its Big Data.

Neural networks

Armed with deep learning and other machine learning algorithms, neural networks can excel at a huge variety of tasks, from sorting raw vegetables at a cannery to discovering new celestial bodies in photos taken by huge orbital telescopes. Neural networks can analyze the flow of incoming data and highlight patterns or abnormalities according to preconfigured parameters. This greatly helps automate the analysis and provides powerful tools for finding valuable information in the flow of unstructured data.

Microservices with analytics

Microservices oriented toward delivering precise analytics are rapidly growing in size and capabilities. There are tools for measuring everything, checking ongoing expenditure and alerting on suspicious spending, processing photos to automatically remove the background or highlight the important part of the picture, etc. There is also a myriad of other online microservices, like the famous Google Translate, which recently stopped spitting out gibberish and began producing quite polished texts thanks to the intensive addition of various machine learning algorithms. However, as the industry matures, users expect developers to shift their efforts to much more complex applications, which will use various machine learning models to analyze historical data and better notice patterns in newly received streaming data.

Improved interface

One of the problems data analysts and decision makers face nowadays is the absence of convenient GUIs to work on top of the analytics itself. Tools like the R language and Jupyter Notebook help present the results of data analytics conveniently. Unlike Excel spreadsheets, R scripts can be adjusted and re-run with ease, making them universal and reliable tools. The name Jupyter refers to Julia, Python and R, the first target languages for Notebook kernels. Notebooks backed by these kernels allow creating documents with live code, data visualizations, equations and explanatory text, thus providing a convenient ML development environment.

Improved tools for neural networks and building ML models

Tools like TensorFlow, MXNet, Microsoft Cognitive Toolkit 2.0, and Scikit-learn help developers and data analysts move away from algorithm-specific approaches like:

- CNN,

- LSTM,

- DNN,

- FNN,

- Bagging method,

- Decision tree method,

- Random forest method.

Instead, these platforms allow solving a specific task using a particular approach, like:

- Recognizing objects in a picture using a CNN,

- Evaluating the credibility and reliability of a financial institution customer using a decision tree,

- Correcting the style and grammar of a text using an LSTM network, etc.
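To make the decision tree example a bit more concrete, here is a minimal sketch of the idea in plain Python: a depth-1 decision tree (a "decision stump") that picks the single best question to ask about a customer. The customer records, the two features (income and missed payments) and the function names are all hypothetical and invented for illustration. In practice you would more likely rely on a library implementation such as Scikit-learn's `DecisionTreeClassifier` than write this by hand.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels (0.0 means perfectly pure)."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def best_split(rows, labels):
    """Return (score, feature, threshold, left_labels, right_labels) with the lowest weighted Gini."""
    best = None
    n = len(rows)
    for feature in range(len(rows[0])):
        for threshold in sorted({row[feature] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[feature] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[feature] > threshold]
            if not left or not right:
                continue  # a split must send at least one customer to each side
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[0]:
                best = (score, feature, threshold, left, right)
    return best


def train_stump(rows, labels):
    """Learn a one-question tree: (feature, threshold, vote_if_below_or_equal, vote_if_above)."""
    split = best_split(rows, labels)
    if split is None:
        raise ValueError("no valid split found")
    _, feature, threshold, left, right = split
    left_vote = int(2 * sum(left) >= len(left))     # majority label on the left side
    right_vote = int(2 * sum(right) >= len(right))  # majority label on the right side
    return feature, threshold, left_vote, right_vote


def predict(stump, row):
    feature, threshold, left_vote, right_vote = stump
    return left_vote if row[feature] <= threshold else right_vote


# Hypothetical customers: (yearly income in thousands, missed payments); label 1 = reliable.
customers = [(25, 4), (40, 3), (55, 0), (70, 1), (30, 5), (90, 0), (60, 2), (35, 1)]
reliable = [0, 0, 1, 1, 0, 1, 1, 1]

stump = train_stump(customers, reliable)
print(stump)                     # the learned question: feature index, threshold, votes
print(predict(stump, (50, 0)))   # a new customer with no missed payments
```

On this toy data the stump ends up asking about missed payments rather than income, because that question separates the two groups cleanly. A full decision tree simply repeats the same search inside each branch.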

All in all, you do not need a Ph.D. to get neural networks right nowadays, as there is a multitude of useful tools for building specialized Data Science systems. TensorFlow, for example, stands behind Google’s recent improvements in Translate and underpins many other Google services, effectively increasing their value and business impact.

Deep learning custom tools

As we have explained in our recent article on deep learning, while AI is an umbrella buzzword for machine learning, neural networks, machine intelligence and cognitive computing, it is not yet as refined as we expect it to be. As of now, it is still unable to explain the reasons for its actions, make moral choices or distinguish between good and bad actions. However, in 2017 the biggest value will come from custom deep learning tools based on simple algorithms applied repeatedly to large data sets. Yes, these algorithms will not be almighty, but they will excel at their particular tasks, where consistency of results is much more important than potential insight.

Data monetization

Predictive and prescriptive analytics are approaches that help organizations minimize expenses and earn money using their Big Data tools. Selling the results of analytics as a service, or structuring and distributing data for customers, can also become a profitable market.

Streaming analytics

Real-time analysis of streaming data is essential for making decisions that affect ongoing events. GPS navigators use this logic to give directions to the destination. One of the main benefits is that the stream of anonymous data from other users helps avoid bottlenecks and traffic jams when the route is created. 2017 will definitely introduce more streaming data analytics services, as they are in high demand.
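As a rough illustration of the streaming idea, the sketch below keeps a rolling average over the most recent readings and flags a slowdown as soon as that average drops below a threshold, without ever storing the whole stream. The speed readings, the window size and the 20 km/h threshold are assumptions made up for this example, not values taken from any real navigation service.

```python
from collections import deque


class SlidingWindowAverage:
    """Keeps the mean of the most recent `size` readings from a stream."""

    def __init__(self, size):
        if size <= 0:
            raise ValueError("size must be positive")
        self.window = deque(maxlen=size)  # the oldest reading drops out automatically
        self.total = 0.0

    def update(self, value):
        # If the window is full, subtract the reading that is about to be evicted.
        if len(self.window) == self.window.maxlen:
            self.total -= self.window[0]
        self.window.append(value)
        self.total += value
        return self.total / len(self.window)


def flag_slowdowns(speeds_kmh, size=3, threshold_kmh=20.0):
    """Yield (index, rolling_mean) whenever the rolling mean speed falls below the threshold."""
    average = SlidingWindowAverage(size)
    for index, speed in enumerate(speeds_kmh):
        mean = average.update(speed)
        if mean < threshold_kmh:
            yield index, mean


# Hypothetical speed readings (km/h) reported by vehicles on one road segment.
readings = [60, 58, 55, 30, 12, 8, 10, 45, 60]
alerts = list(flag_slowdowns(readings))
print(alerts)  # positions in the stream where a traffic jam would be suspected
```

Because each reading is processed once and then forgotten, the same loop works whether `readings` is a short list or an endless feed of live data.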

Unstructured data analytics

The constant evolution of data visualization and machine learning algorithms keeps making new types of data available for analysis. Analysis of video viewing statistics and social media interactions is a goldmine of knowledge, making it possible to better personalize offers and show more relevant ads at a more appropriate time, leading to better conversions. In 2017 such services will further evolve and blossom.

Conclusions

All in all, Big Data analytics is booming nowadays and shows no signs of slowing down. While some trends like monthly BI reports, overnight batch analysis, model-centered applications, etc. are losing traction, new hot trends appear and gain popularity. What are your thoughts on this? Did we miss something big or include something not-so-important? Please let us know, and spread the word if you found this read interesting!