Top 5 Reasons for Financial Services to Opt for Cloud Computing

Cloud computing is the de facto standard for app deployment nowadays. However, what suits small startups often seems less useful to financial services organizations. Below are five reasons for them to move to the cloud.

What is the main difference between cloud computing and on-prem server infrastructure? The cost of operations. To run an app on a dedicated on-prem server, a business must cover the following expenses:

- building and maintaining the data center (or a server room, at the very least),

- purchasing the hardware and backup components,

- purchasing the proprietary software systems,

- hiring and training the infrastructure management specialists,

- disaster recovery and DDoS protection.

The upfront capital expenditure (CAPEX) in this case is clearly huge, and ongoing infrastructure maintenance, an operating expense (OPEX), also costs a considerable sum. That said, the benefits of this approach are quite tangible:

- The speed of operations, as your employees are on the same network as the servers and are not limited by ISP bandwidth,

- You don’t have to pay an ISP for the Internet bandwidth needed to reach your systems,

- Physical security of your hardware.

Keep in mind, however, that hardware updates and software upgrades, disaster recovery, and possible service downtime after a faulty update, all the nightmares of the pre-cloud era, are still there. And for financial services, time is money more than for anybody else.
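The CAPEX versus OPEX trade-off above comes down to simple arithmetic: on-prem infrastructure front-loads a large capital expense and then incurs a monthly running cost, while the cloud has little upfront cost but a recurring monthly bill. Below is a minimal Python sketch, assuming flat monthly costs (no hardware refresh cycles, discounts, or price changes) and purely hypothetical figures, that finds the month at which cumulative on-prem spend catches up with cumulative cloud spend. The function names are illustrative and not part of any tool.

```python
from typing import Optional


def cumulative_cost(capex: float, monthly_opex: float, months: int) -> float:
    """Total spend after `months`: a one-off CAPEX plus recurring monthly OPEX."""
    return capex + monthly_opex * months


def break_even_month(on_prem_capex: float, on_prem_opex: float,
                     cloud_opex: float, horizon: int = 120) -> Optional[int]:
    """First month in which cumulative on-prem spend is at or below cloud spend.

    Assumes the cloud has no upfront cost and both monthly costs stay flat.
    Returns None if on-prem never becomes cheaper within `horizon` months,
    e.g. when on-prem running costs are not lower than the cloud bill.
    """
    for month in range(1, horizon + 1):
        on_prem = cumulative_cost(on_prem_capex, on_prem_opex, month)
        cloud = cumulative_cost(0, cloud_opex, month)
        if on_prem <= cloud:
            return month
    return None


# Hypothetical figures: $500k to build, $20k/month to run on-prem,
# versus a $35k/month cloud bill.
print(break_even_month(on_prem_capex=500_000, on_prem_opex=20_000, cloud_opex=35_000))  # → 34
```

With these made-up numbers, the on-prem option only pays off after almost three years; if the on-prem running cost is not lower than the cloud bill, the function returns None because the upfront investment is never recovered.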

Cloud computing: a feasible alternative to on-prem servers for financial services

Moving to the cloud is essentially a decision to eliminate that CAPEX and hand much of the responsibility for disaster recovery, software and hardware updates, and DDoS protection over to the cloud service provider. That said, one of the main gains of the cloud transition is peace of mind. But wait, what about security? Major cloud data leaks frequently make headlines in the media!

Well, surprisingly enough, according to a Bloomberg report, 25 of the world’s 38 leading financial organizations are already in the cloud or in the process of transitioning to it. Perhaps they look past the celebrity photos leaked from iCloud and instead trust AWS and Microsoft Azure to take good care of security? There is solid ground for such trust: the US Department of Defense and the CIA have signed a series of contracts worth up to $600 million with AWS to provide cloud computing services for their classified data. In addition, Microsoft Azure’s home page states that 90% of Fortune 500 companies host their data with Azure.

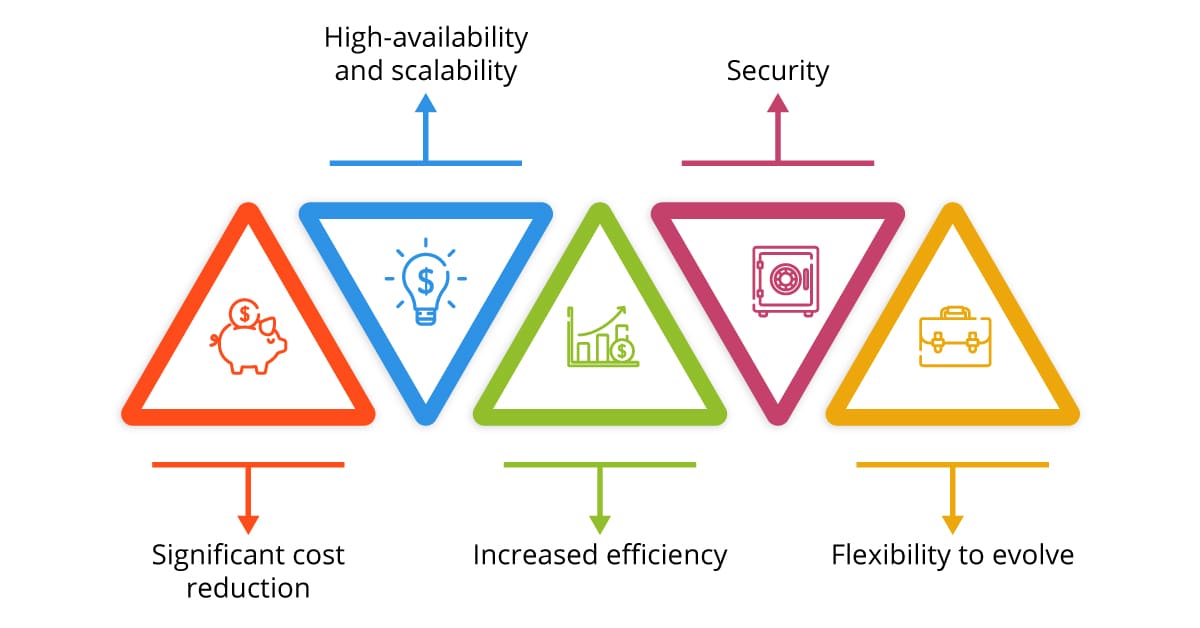

Below are the top 5 reasons for financial services to opt for cloud computing:

- Significant cost reduction. One AWS case study shows how ME Bank, one of the largest Australian financial institutions, saved up to 75% on its development and testing environments after moving to the cloud, not to mention the time and payroll savings.

- High availability and scalability. These are among the core features of the cloud. You have virtually unlimited system resources and can spin up additional instances within minutes, not days. Running your app redundantly across several data centers lets you get close to 100% uptime, which is great for banks: time is money, remember?

- Increased efficiency. Cloud infrastructure serves as the foundation for Big Data analytics in banking, as it makes it comparatively easy to turn data flows into actionable business insights with various Big Data visualization tools. This, in turn, enables a financial company to analyze customer feedback and implement changes faster. This is crucial, as 52% of UK SMEs stated that banks were not business-friendly in terms of technology. In addition, 44% of US Millennials say their banking partners don’t meet their expectations and prefer chatbots to call center employees, according to Forrester.

Today’s customers are increasingly tech-savvy, and failing to meet their needs will result in the loss of crucial customer audiences.

- Security. There is a widespread misconception that “cloud is not secure”, which is why many financial institutions don’t seriously consider a cloud transition. As a matter of fact, AWS has passed extensive third-party security and compliance audits and offers HIPAA-eligible services for storing protected health information and other sensitive personal data. This certified cloud service provider offers features such as AWS Key Management Service (KMS) for data encryption, as well as built-in security services for data storage and protection. Moreover, cloud service providers’ data centers boast physical security comparable to or exceeding that of bank vaults, and you pay significantly less for it than for keeping an on-site team.

- Flexibility to evolve. Cloud computing is at the forefront of technological innovation, and cloud infrastructure advancements underpin the latest achievements in almost every aspect of our lives, from Augmented Reality / Virtual Reality to PayPass cards, smart homes, Industry 4.0, high-performance data processing solutions, and more. Only by using the latest cloud infrastructure capabilities can a business remain relevant and competitive in the highly digitalized market of tomorrow. This way, you will be able to evolve together with your customers’ preferences and exceed their expectations.
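On the high-availability point: even well-run infrastructure does not achieve literal 100% uptime, so availability is usually expressed as a percentage such as 99.9% or 99.99%. The minimal Python sketch below converts such a percentage into the downtime it allows per year and shows why running redundant copies in several data centers (availability zones) pushes availability up. It assumes zone failures are independent, which is a simplification, since real outages can be correlated; the percentages are illustrative and are not any provider’s actual SLA.

```python
import math

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; leap years ignored


def allowed_downtime_minutes(availability_pct: float,
                             period_minutes: int = MINUTES_PER_YEAR) -> float:
    """Maximum downtime over the period implied by an availability percentage."""
    if not 0.0 <= availability_pct <= 100.0:
        raise ValueError("availability_pct must be between 0 and 100")
    return period_minutes * (1.0 - availability_pct / 100.0)


def redundant_availability_pct(*zone_pcts: float) -> float:
    """Availability of N redundant copies, assuming independent failures.

    The service is down only when every copy is down at the same time,
    so overall unavailability is the product of per-zone unavailabilities.
    """
    unavailability = math.prod(1.0 - pct / 100.0 for pct in zone_pcts)
    return 100.0 * (1.0 - unavailability)


for target in (99.9, 99.99, 99.999):
    print(f"{target}% -> {allowed_downtime_minutes(target):.1f} min/year")

combined = redundant_availability_pct(99.9, 99.9)
print(f"two 99.9% zones -> {combined:.4f}%")
```

In this model, 99.9% availability still allows roughly 8.8 hours of downtime a year, while two independent 99.9% zones together reach about 99.9999%, which is the arithmetic behind multi-zone deployments.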

Of course, there are certain downsides to moving the IT infrastructure of financial entities to the cloud:

- Business continuity depends on Internet connectivity

- Monthly payments for consumed traffic can be quite large

- Certain legacy systems cannot be lifted and shifted to the cloud, so cloud-native equivalents have to be built from scratch

These are the reasons many companies decide to move from the public cloud back to on-prem private cloud solutions such as OpenStack or Oracle Private Cloud. However, this decision should only be made once the monthly OPEX of cloud operations exceeds the amortized monthly cost of building and operating a private cloud data center. The best part of it all is your business will enjoy

Final thoughts on the reasons for financial companies to opt for cloud computing

As you can see, there are tangible reasons for financial services to opt for cloud computing solutions. This might seem a daunting prospect, yet it is the only viable way to remain competitive in the fast-evolving landscape of modern business. Moving corporate workloads to the cloud helps cut operational expenses, ensure service continuity and scalability, and provide robust security features, increased efficiency, and operational flexibility.

The only problem is finding a reliable contractor to make such a digital transformation a reality. IT Svit would be glad to assist with this endeavor. We are consistently listed among the top 10 Managed Services Providers worldwide and the top 3 IT outsourcing companies in Ukraine, according to the international B2B ratings platform Clutch. Our expertise, gained over 13 years of providing cloud infrastructure support and more than 600 completed projects, is at your disposal. Contact IT Svit to leverage all the benefits of the transition to the cloud for your business!