Guide to AWS platform migration: AWS migration best practices

One of the most common tasks we perform at IT Svit is cloud migration from AWS to GCP, Azure, DigitalOcean and vice versa, or from legacy infrastructure to the cloud.

Cloud infrastructure delivers a multitude of benefits compared to dedicated servers: better use of computing resources, scalability and high availability for services, granular access control, better security, more cost-efficient spending, and so on. For these reasons, many companies migrate to the cloud from dedicated data centers or in-house servers.

However, the cloud transition can pose another danger: vendor lock-in, when the company's workflows and IT operations are tied to the specific features and services of a single cloud service provider (CSP). For example, if a company builds its operations around AWS RDS, replacing it with the Google Cloud or MS Azure equivalents is quite a complicated feat.

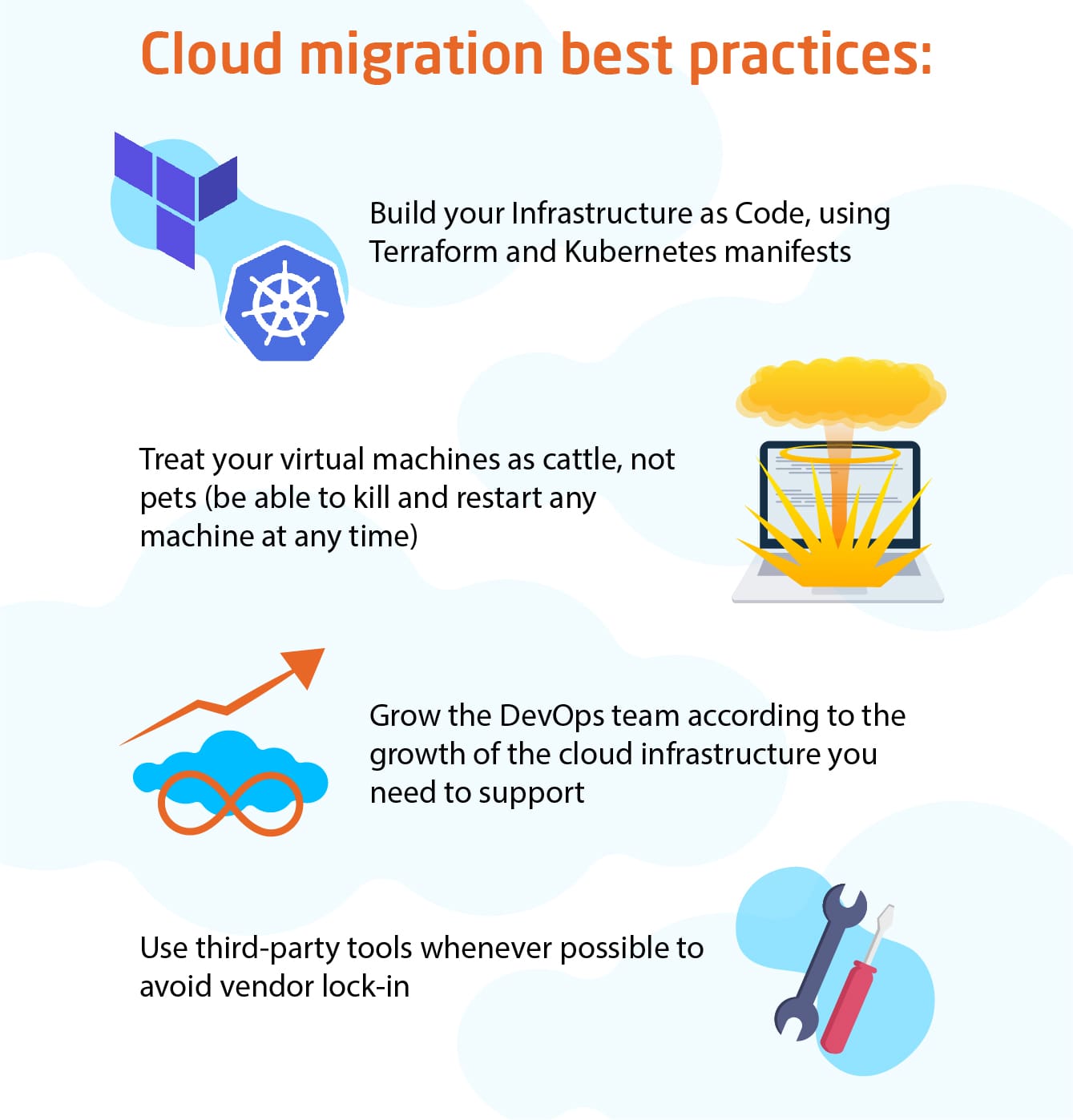

Cloud migration best practices

With that in mind, there are certain best practices to follow when performing a migration to the cloud:

- Build your Infrastructure as Code, using Terraform and Kubernetes manifests

- Treat your virtual machines as cattle, not pets (be able to kill and restart any machine at any time)

- Grow the DevOps team according to the growth of the cloud infrastructure you need to support

- Use third-party tools whenever possible to avoid vendor lock-in

Toolkit for AWS infrastructure migration

We take AWS as an example because it is one of the fastest-growing and most widely used CSPs. However, there are many reasons why a business might need to migrate to AWS or away from it. Below we describe the tools best suited for this purpose.

Infrastructure orchestration: Terraform

A common sin of solution architects is the manual configuration of experimental environments. For example, a customer wants a couple of servers provisioned to test the compatibility of their product or the process of integration with another cloud platform. These environments are delivered manually, they work as intended, other tasks take over, and a couple of months later none of the support engineers can reproduce the deployment and configuration of these machines.

This is why Terraform should be a cornerstone of modern infrastructure management. When all the infrastructure configuration parameters are stored as declarative code in easily adjustable Terraform manifests, every state of your systems can be reproduced. Better still, the same language and workflow apply across multiple cloud platforms. Resource definitions are provider-specific, so moving from Amazon Web Services to Google Cloud Platform means rewriting the resource blocks, but the module structure, variables and plan/apply workflow carry over.

Terraform covers most of the stack, from integration to load balancing and creating network resources. For example, one can create a DNS record hosted in GCP that points to web services running on AWS. While every cloud platform has its own configuration manager (AWS CloudFormation, Google Cloud Deployment Manager or Heat Orchestration Templates for OpenStack), we find Terraform more efficient and convenient, because it is a cloud-agnostic orchestration tool able to work across multiple cloud ecosystems.
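To illustrate what storing infrastructure as declarative code can look like, here is a minimal sketch that renders one provider-neutral server description into Terraform's JSON configuration syntax for either AWS or GCP. The resource types `aws_instance` and `google_compute_instance` are real; the `SERVER` structure, the lookup tables, the function names and every ID are hypothetical placeholders chosen for this example.

```python
import json

# Hypothetical, provider-neutral description of one server.
SERVER = {"name": "web", "size": "small", "image": "ubuntu-22.04"}

# Assumed lookup tables; the values are placeholders, not verified IDs.
AWS_SIZES = {"small": "t3.micro"}
GCP_SIZES = {"small": "e2-micro"}
AWS_IMAGES = {"ubuntu-22.04": "ami-PLACEHOLDER"}
GCP_IMAGES = {"ubuntu-22.04": "ubuntu-os-cloud/ubuntu-2204-lts"}


def render_aws(server):
    """Render the server as an aws_instance resource (Terraform JSON syntax)."""
    return {"resource": {"aws_instance": {server["name"]: {
        "ami": AWS_IMAGES[server["image"]],
        "instance_type": AWS_SIZES[server["size"]],
        "tags": {"Name": server["name"]},
    }}}}


def render_gcp(server, zone="us-central1-a"):
    """Render the same server as a google_compute_instance resource."""
    return {"resource": {"google_compute_instance": {server["name"]: {
        "name": server["name"],
        "machine_type": GCP_SIZES[server["size"]],
        "zone": zone,
        "boot_disk": {"initialize_params": {"image": GCP_IMAGES[server["image"]]}},
        "network_interface": {"network": "default"},
    }}}}


if __name__ == "__main__":
    # Each document could be saved as main.tf.json in its own directory.
    print(json.dumps(render_aws(SERVER), indent=2))
    print(json.dumps(render_gcp(SERVER), indent=2))
```

The point of the sketch is that the description of the server stays the same, while only the provider-specific rendering changes.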
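The cross-cloud DNS case mentioned above could be sketched as follows, again in Terraform's JSON syntax generated from Python. `google_dns_record_set` and the `public_ip` attribute of `aws_instance` are real; the function name, zone name, domain and instance name are assumptions made for illustration.

```python
import json


def cross_cloud_dns(fqdn, managed_zone, instance_name, ttl=300):
    """Build a GCP Cloud DNS A record that points at an AWS instance.

    The rrdatas entry is a Terraform interpolation string, so Terraform
    resolves the address only after the AWS instance has been created.
    """
    if not fqdn.endswith("."):
        raise ValueError("Cloud DNS record names must end with a dot")
    return {"resource": {"google_dns_record_set": {"web": {
        "name": fqdn,
        "managed_zone": managed_zone,
        "type": "A",
        "ttl": ttl,
        "rrdatas": ["${aws_instance.%s.public_ip}" % instance_name],
    }}}}


if __name__ == "__main__":
    # Hypothetical zone and domain, for illustration only.
    config = cross_cloud_dns("www.example.com.", "example-zone", "web")
    print(json.dumps(config, indent=2))
```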

Server and configuration management: Ansible

Ansible is our tool of choice for performing cloud migration due to its simplicity and efficiency. It runs from practically any Unix machine and can connect to any host reachable over SSH. By following Ansible best practices, your DevOps team can turn your configuration base into a lean, efficient machine. This keeps your environment ready to run at any time with all packages up to date, and correct use of handlers helps avoid downtime during updates.

For example, an Ansible code base can be used without adjustments on both AWS and GCP. The only things that must be updated are the dynamic inventory scripts, written in Python. The rest of the configuration, governed by Ansible playbooks, remains the same, which makes it easy to use Ansible across multiple cloud environments. However, Ansible is not perfect: it is a push-based, agentless system (unlike agent-based Salt, Puppet or Chef). As a result, it is not ideal for autoscaling, because a freshly launched instance stays unconfigured until a playbook is run against it, but this can be rectified through additional scripting.
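A dynamic inventory script only has to print JSON in the shape Ansible expects: groups with a `hosts` list, plus a `_meta.hostvars` map. Below is a minimal sketch of such a script. The `HOSTS` list is hard-coded, hypothetical data; a real script would query the AWS or GCP API at that point, and that lookup is the only part that would differ between clouds.

```python
import json
import sys

# Hypothetical host data; a real script would query the cloud API here.
HOSTS = [
    {"name": "web-1", "ip": "10.0.0.11", "role": "web", "cloud": "aws"},
    {"name": "web-2", "ip": "10.0.1.11", "role": "web", "cloud": "gcp"},
    {"name": "db-1", "ip": "10.0.0.21", "role": "db", "cloud": "aws"},
]


def build_inventory(hosts):
    """Group hosts by role and by cloud in Ansible's inventory JSON format."""
    inventory = {"_meta": {"hostvars": {}}}
    for host in hosts:
        for group in (host["role"], host["cloud"]):
            inventory.setdefault(group, {"hosts": []})["hosts"].append(host["name"])
        inventory["_meta"]["hostvars"][host["name"]] = {"ansible_host": host["ip"]}
    return inventory


def main(argv):
    inventory = build_inventory(HOSTS)
    if len(argv) >= 2 and argv[0] == "--host":
        # Ansible may ask for the variables of a single host.
        print(json.dumps(inventory["_meta"]["hostvars"].get(argv[1], {})))
    else:
        # Default and "--list": print the whole inventory.
        print(json.dumps(inventory, indent=2))


if __name__ == "__main__":
    main(sys.argv[1:])
```

Saved as an executable file, a script like this can be passed to `ansible-playbook` with the `-i` option, and the playbooks themselves stay untouched.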

Image creation: Docker/Packer

A virtual machine image is a basic building block of modern cloud infrastructure. Building images with Docker or Packer lets you quickly provide preconfigured infrastructure for running your apps and services. AWS Marketplace provides a huge library of ready-made AMIs for a wide range of purposes. However, business use cases are still more diverse than this assortment, so creating a library of images of your testing, staging and production environments is crucial to ensure the productivity and stability of your IT operations. For this reason, every business should be able to build and launch images on any cloud platform and in any region.

Docker and Packer are the tools of choice for creating and operating your image registry. The main benefit of using them in conjunction with Terraform and Ansible is the ability to operate smoothly regardless of the underlying cloud infrastructure. Changing only a few lines of code allows spinning up your images on AWS, GCP, Azure or DigitalOcean, which makes AWS migration much easier and much more manageable.
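As a sketch of how one image definition can target several clouds, the snippet below generates a Packer template in the JSON format with two builders, `amazon-ebs` and `googlecompute`, that share a single Ansible provisioner. The builder and provisioner types are real Packer components; the function name, project ID, AMI ID, regions and playbook name are placeholders assumed for this example. Recent Packer releases favor HCL2 templates and require the matching plugins to be installed, so treat this as an illustration rather than a ready-made build.

```python
import json


def packer_template(image_name, playbook="site.yml"):
    """Return a Packer JSON template that builds the same image on AWS and GCP."""
    return {
        "builders": [
            {
                "type": "amazon-ebs",
                "region": "us-east-1",
                "source_ami": "ami-PLACEHOLDER",  # placeholder, not a real AMI
                "instance_type": "t3.micro",
                "ssh_username": "ubuntu",
                "ami_name": image_name,
            },
            {
                "type": "googlecompute",
                "project_id": "my-project-PLACEHOLDER",  # placeholder project
                "source_image_family": "ubuntu-2204-lts",
                "zone": "us-central1-a",
                "ssh_username": "ubuntu",
                "image_name": image_name,
            },
        ],
        # The same playbook configures the image on both platforms.
        "provisioners": [{"type": "ansible", "playbook_file": playbook}],
    }


if __name__ == "__main__":
    print(json.dumps(packer_template("app-base-v1"), indent=2))
```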

App containerization: Docker

After creating the images, the next step in managing your apps in the cloud is running the containers built from them. This is best done using Docker, the tool that popularized app containerization and remains the most common choice for it. The apps are packed into containers that include the operating system userland, the runtime and all the libraries needed to run the code. The main benefit of this approach is consistency: Docker containers run the same way on AWS, GCP, Azure, OpenStack or any other infrastructure. They can be launched on any host with Docker installed, which greatly increases portability and decreases operational overhead.
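To make "runtime and libraries packed with the code" concrete, here is a small sketch that renders a Dockerfile from a description of a Python app. `FROM`, `RUN`, `WORKDIR`, `COPY` and `ENTRYPOINT` are standard Dockerfile instructions; the function name, base image, package list and entrypoint are assumptions made for illustration.

```python
import json


def render_dockerfile(base_image, packages, entrypoint):
    """Render a minimal Dockerfile for a Python app as a string."""
    lines = [f"FROM {base_image}"]
    if packages:
        # Libraries are baked into the image, so every host runs the same set.
        lines.append("RUN pip install --no-cache-dir " + " ".join(packages))
    lines += [
        "WORKDIR /app",
        "COPY . /app",
        # The exec form of ENTRYPOINT is a JSON array.
        "ENTRYPOINT " + json.dumps(entrypoint),
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_dockerfile("python:3.12-slim", ["flask"], ["python", "app.py"]))
```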

Configuration and container management: Kubernetes

Kubernetes was originally developed by Google and is now an open-source project maintained by the Cloud Native Computing Foundation. Its flexibility in managing containers is hard to match. All of the major cloud service providers offer Kubernetes as a service, so basing your infrastructure on Kubernetes is a wise choice: it will fit in whatever the destination of your cloud migration. Kubernetes allows launching, operating, monitoring, shutting down and restarting clusters, nodes and pods of containers, ensuring stable uptime and ease of scalability for your cloud operations. Google Kubernetes Engine is a great way to start your acquaintance with container orchestration tools, and migration from it or to it is described in detail in the AWS migration documentation, as well as in the documentation of other cloud vendors.
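Because a Kubernetes manifest is plain structured data, the same Deployment can be applied to a cluster on any provider. The sketch below builds an `apps/v1` Deployment as a Python dictionary and prints it as JSON, which `kubectl apply -f -` accepts alongside YAML. The function name, app name, image reference and port are hypothetical.

```python
import json


def deployment(name, image, replicas=2, port=8080):
    """Build a minimal apps/v1 Deployment manifest."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                    }]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical image reference; pipe the output to: kubectl apply -f -
    print(json.dumps(deployment("shop", "registry.example.com/shop:1.0"), indent=2))
```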

User management: JumpCloud

One of the problems of cloud management at scale is the complexity of user management: each user account must be provided with SSH access to the app, so you must store and manage a plethora of SSH keys. JumpCloud solves this by providing a hosted LDAP/Active Directory service, which lets you provision users on any database and any container with ease. Google Compute Engine provides another convenient feature: users can run the gcloud command to upload their SSH key/username pairs from the Google Cloud CLI, and these users will be created on the intended machines from the start. This can also be done through a JumpCloud endpoint, just the same as for AWS, Azure and any other cloud.

Image storage: RexRay

The other thing to consider during AWS migration is porting your library of images, and the storage volumes they rely on, to another platform. RexRay is a convenient interface for provisioning storage volumes and mounting them into containers. It provides Docker plugins that make API calls to AWS, GCP, Azure and other platforms, so changing a couple of lines of configuration allows migrating from AWS EBS volumes to GCP Compute Engine persistent disks with little manual effort.
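As a rough sketch of the "couple of lines" that change, the snippet below builds the `docker volume create` command for either cloud and only swaps the volume driver. The plugin names `rexray/ebs` and `rexray/gcepd` are assumed here to be the installed RexRay Docker plugins, and the `size` option (in GB) is assumed to be supported by both; the function name and the volume name are hypothetical.

```python
# Assumed mapping from cloud to the installed RexRay Docker plugin.
DRIVERS = {"aws": "rexray/ebs", "gcp": "rexray/gcepd"}


def volume_create_cmd(cloud, name, size_gb):
    """Return the docker CLI arguments that create a RexRay-backed volume."""
    if cloud not in DRIVERS:
        raise ValueError(f"no RexRay driver configured for {cloud!r}")
    return [
        "docker", "volume", "create",
        "--driver", DRIVERS[cloud],
        "--opt", f"size={size_gb}",
        name,
    ]


if __name__ == "__main__":
    # Only the driver differs between the two commands.
    print(" ".join(volume_create_cmd("aws", "app-data", 20)))
    print(" ".join(volume_create_cmd("gcp", "app-data", 20)))
```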

Final thoughts: DevOps tools enable smooth AWS migration

By using these and other popular DevOps tools, the IT Svit team is able to perform swift AWS migration from and to any other cloud service provider. All of these tools are designed to work together and provide a consistent management layer across cloud platforms. They help make migration between AWS and Google Compute Engine smooth and nearly effortless, and our DevOps team has the experience to perform this task well.

Of course, there are many more user stories: while some IT Svit customers want to move to AWS, others order migration from AWS to a private cloud or a multi-cloud environment. The main thing is that the DevOps tools listed above help perform any type of migration. This toolkit can be used for a variety of purposes, and thanks to its investment in the right tooling IT Svit can engage in a wide range of projects.

Would you like us to handle AWS migration for you? Let us know, IT Svit is always glad to help!