AI Tech Stack: A Comprehensive Framework for Artificial Intelligence

As artificial intelligence reshapes industries and redefines human capabilities, understanding the AI tech stack has become crucial for businesses and technologists alike. AI promises new levels of automation, insight, and innovation, and the global AI market is projected to expand at a 37.3% CAGR from 2023 to 2030. The AI tech stack is the framework that enables the development, deployment, and maintenance of sophisticated AI systems, and 72% of leaders consider it a “business advantage.”

As adoption has grown, implementing AI has become considerably more complex. The AI tech stack, with its layered approach, offers a structured pathway through this complexity.

This article delves into the components and stages of the modern AI technology stack, offering insights into how businesses can leverage this framework to drive innovation and gain a competitive edge. Whether you’re a business leader exploring AI adoption or a curious technophile, understanding the AI stack is key to unlocking the full potential of artificial intelligence.

What Are The AI Layers?

The AI technology stack forms the backbone of modern artificial intelligence systems, providing a structural framework that enables the development, deployment, and maintenance of sophisticated AI applications.

Unlike monolithic architectures, the AI stack’s layered approach offers modularity, scalability, and simplified troubleshooting. This innovative structure encompasses critical components such as data ingestion, storage, processing, machine learning algorithms, APIs, and user interfaces. These layers serve as foundational pillars, supporting the intricate web of algorithms, data pipelines, and application interfaces that power AI systems.

Application Layer

At the forefront of the AI tech stack lies the Application Layer, which serves as the primary interface between users and the underlying AI system. This crucial layer encompasses a wide range of elements, from intuitive web applications to robust REST APIs, managing the complex flow of data between client-side and server-side environments. The Application Layer handles essential operations such as capturing user inputs through graphical interfaces, rendering data visualizations on interactive dashboards, and delivering data-driven insights via API endpoints.

Developers often leverage technologies like React for frontend development and Django for backend processes, chosen for their specific advantages in tasks such as data validation, user authentication, and efficient API request routing. By acting as a secure gateway, the Application Layer not only routes user requests to the underlying machine learning models but also maintains stringent security protocols to safeguard data integrity throughout the AI system.
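The gateway role described above can be sketched in a few lines. This is a minimal illustration, not a framework recipe: the `features` payload field, the `handle_request` function, and the `mean_model` stand-in are all hypothetical names, and a real application would rely on the validation and routing facilities of its web framework.

```python
from typing import Callable, List


def validate_request(payload: dict, n_features: int) -> List[float]:
    """Return the feature vector, or raise ValueError if the payload is malformed."""
    features = payload.get("features")
    if not isinstance(features, list) or len(features) != n_features:
        raise ValueError(f"'features' must be a list of {n_features} numbers")
    # bool is a subclass of int in Python, so reject it explicitly.
    if not all(isinstance(x, (int, float)) and not isinstance(x, bool) for x in features):
        raise ValueError("'features' must contain only numbers")
    return [float(x) for x in features]


def handle_request(payload: dict, model: Callable[[List[float]], float], n_features: int) -> dict:
    """Validate the input, route it to the model, and wrap the result in a response."""
    try:
        features = validate_request(payload, n_features)
    except ValueError as err:
        return {"status": 400, "error": str(err)}
    return {"status": 200, "prediction": model(features)}


# Hypothetical stand-in for a trained model: the mean of the inputs.
def mean_model(features: List[float]) -> float:
    return sum(features) / len(features)
```

Rejecting malformed input before it reaches the model keeps validation errors separate from model errors, which makes both easier to diagnose.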

Model Layer

Transitioning deeper into the AI technology stack, we encounter the Model Layer, which serves as the powerhouse of decision-making and data processing. This layer relies on specialized libraries such as TensorFlow and PyTorch, offering a versatile toolkit for a wide range of machine learning activities. From natural language understanding to computer vision and predictive analytics, the Model Layer is where the magic of AI truly happens.

Within this layer, critical processes such as feature engineering, model training, and hyperparameter tuning take place. Data scientists and machine learning engineers meticulously evaluate various algorithms, from traditional regression models to complex neural networks, scrutinizing their performance based on metrics like precision, recall, and F1-score. The Model Layer acts as a crucial intermediary, pulling in data from the Application Layer, performing computation-intensive tasks, and pushing valuable insights back for display or further action.

Infrastructure Layer

The Infrastructure Layer allocates and manages the computing resources the rest of the stack depends on, including CPUs, GPUs, and TPUs. Scalability, low latency, and fault tolerance are designed in at this level, often with orchestration technologies like Kubernetes for efficient container management.

Cloud computing plays a significant role in the Infrastructure Layer, with services such as Amazon Web Services’ EC2 instances and Microsoft Azure’s AI-specific accelerators providing the necessary horsepower to handle intensive computations. Far from being a passive recipient of requests, the Infrastructure Layer is an evolving system designed to deploy resources intelligently. It addresses critical aspects such as load balancing, data storage solutions, and network latency, ensuring that processing capabilities are optimized to meet the unique demands of the AI system’s upper layers.
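To make the load-balancing idea concrete, here is a small sketch of the simplest strategy, round-robin, in which requests are handed to workers in a fixed rotation. The class and the worker names are hypothetical; in practice an orchestrator or cloud load balancer does this work, usually with more sophisticated policies.

```python
import itertools


class RoundRobinBalancer:
    """Hand out workers in a fixed rotation so requests are spread evenly."""

    def __init__(self, workers):
        if not workers:
            raise ValueError("at least one worker is required")
        self._cycle = itertools.cycle(list(workers))

    def next_worker(self):
        return next(self._cycle)


# Hypothetical worker names.
balancer = RoundRobinBalancer(["gpu-node-a", "gpu-node-b", "gpu-node-c"])
assignments = [balancer.next_worker() for _ in range(5)]
```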

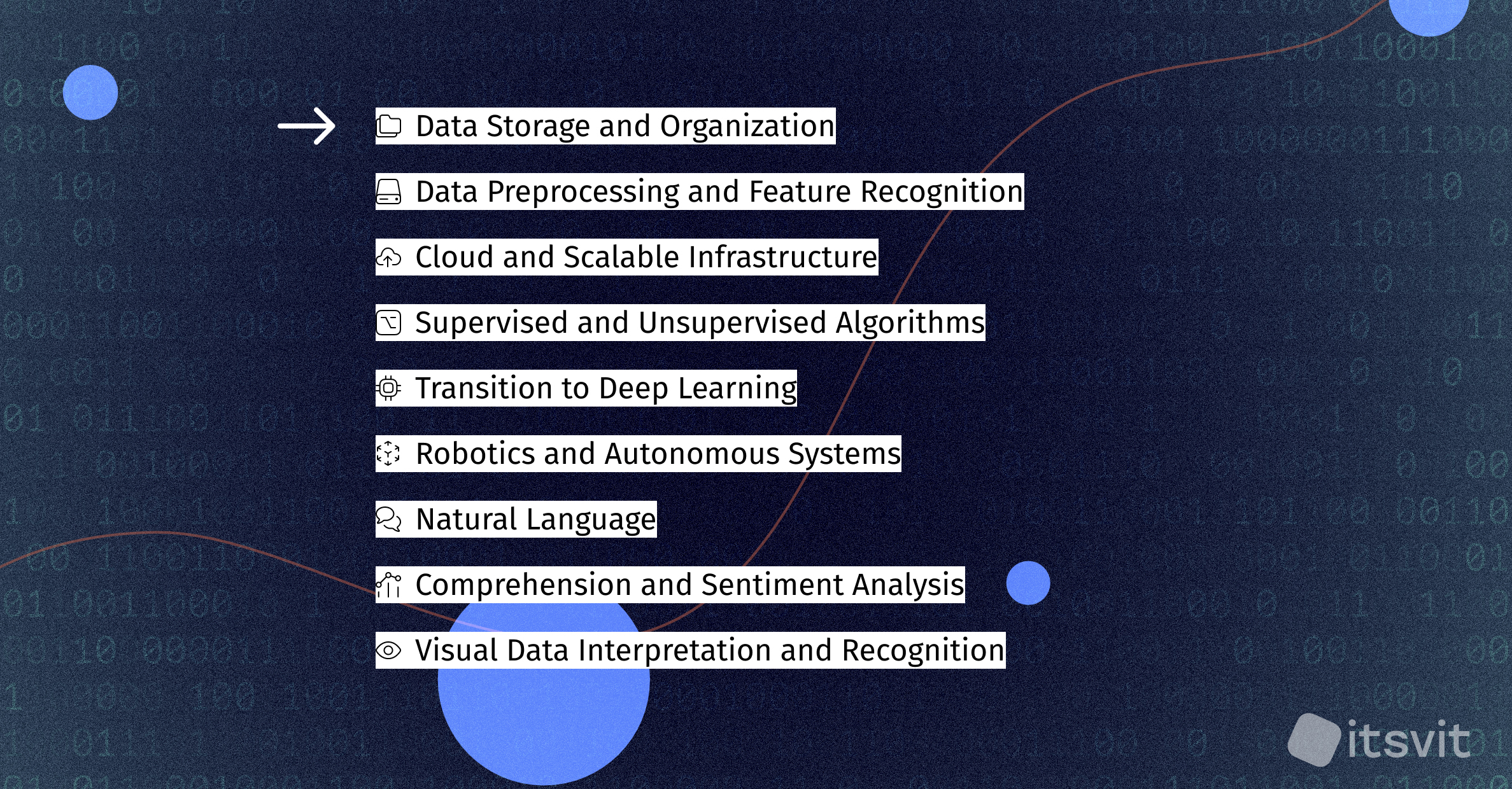

Components Of AI Technology Stack And Their Relevance

The AI tech stack is a robust and multifaceted framework, encompassing various components essential for building, deploying, and maintaining AI systems. Each component plays a crucial role in ensuring the efficiency, scalability, and effectiveness of AI applications. Here, we explore the critical components of the AI stack and their relevance in modern AI development.

Data Storage and Organization

Efficient data storage solutions ensure that vast volumes of structured and unstructured data are securely maintained and readily accessible. Modern AI applications leverage a variety of technologies, including SQL and NoSQL databases, data lakes, and cloud storage platforms, to manage and organize data effectively. Proper data organization facilitates seamless data retrieval, which is paramount for training sophisticated AI models. By implementing robust data storage solutions, organizations not only enhance data accessibility but also ensure data integrity and security, which are crucial for generating reliable AI outcomes.

Data Preprocessing and Feature Recognition

Data preprocessing and feature recognition represent critical steps in the AI pipeline, transforming raw data into valuable insights. Raw data often contains noise, inconsistencies, and irrelevant information that must be cleaned and transformed into a suitable format for analysis. This process involves various techniques, including data normalization, handling missing values, and encoding categorical variables.
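The three techniques named above can be illustrated with plain Python. This is a sketch on made-up columns (`ages` and `plans` are hypothetical); libraries such as Pandas and Scikit-learn provide production-grade equivalents.

```python
def impute_mean(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]


def min_max_normalize(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot_encode(labels):
    """Map each category to a 0/1 vector; columns follow sorted category order."""
    categories = sorted(set(labels))
    return [[1 if label == c else 0 for c in categories] for label in labels]


ages = [20, None, 40, 30]               # hypothetical column with a missing value
plans = ["pro", "free", "pro", "team"]  # hypothetical categorical column

ages_clean = min_max_normalize(impute_mean(ages))
plans_encoded = one_hot_encode(plans)
```

Here the missing age is filled with the mean of the observed ages (30) before scaling, and each plan becomes a vector with a single 1 in the column for its category.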

Feature recognition, a key aspect of this component, involves identifying the most relevant attributes of the data that will contribute to model accuracy. Data scientists employ advanced tools and libraries such as Pandas, Scikit-learn, and TensorFlow Data Validation to perform these tasks efficiently. By implementing robust preprocessing and feature recognition techniques, organizations can significantly enhance model performance, reduce computational costs, and improve the overall effectiveness of their AI systems.

Cloud and Scalable Infrastructure

Cloud and scalable infrastructure form an integral part of the modern AI tech stack, providing the computational power and flexibility required for training and deploying sophisticated AI models. Leading cloud platforms such as Amazon Web Services, Google Cloud, and Microsoft Azure offer scalable resources, including virtual machines, GPUs, and TPUs, which are essential for handling the intensive computations inherent in AI workloads.

Scalable infrastructure ensures that AI systems can handle increasing amounts of data and user requests without compromising performance. This capability is particularly crucial for enterprises deploying AI solutions in production environments, where the ability to scale rapidly in response to changing demands can provide a significant competitive advantage.

Supervised and Unsupervised Algorithms

At the heart of AI model development lie supervised and unsupervised algorithms, each serving distinct yet complementary purposes in extracting insights from data. Supervised learning involves training models on labeled data, where the outcomes are known, to make predictions or classify new data points. Common supervised algorithms include linear regression, decision trees, and support vector machines, each offering unique strengths for different types of problems.
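As a concrete instance of supervised learning, the sketch below fits a simple linear regression by ordinary least squares. The `hours` and `scores` data are invented for illustration and lie exactly on the line y = 2x + 1, so the fitted values are easy to check.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical labeled data generated from y = 2x + 1.
hours = [1.0, 2.0, 3.0, 4.0]
scores = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(hours, scores)
prediction = slope * 5.0 + intercept  # predict the label for an unseen input
```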

Unsupervised learning, conversely, deals with unlabeled data, aiming to uncover hidden patterns or groupings within the dataset. Clustering algorithms like K-means and hierarchical clustering are widely used in unsupervised learning scenarios. Both types of algorithms are foundational to any AI application, enabling organizations to address a wide range of business challenges, from predictive maintenance to customer segmentation.
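For the unsupervised side, here is a minimal sketch of K-means (Lloyd's algorithm) on one-dimensional data. The `spend` values and the starting centroids are hypothetical; real implementations work in many dimensions and choose initial centroids more carefully.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Lloyd's algorithm in 1-D: assign each point to its nearest centroid, then recompute means."""
    centroids = list(centroids)
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
    return centroids, clusters


# Hypothetical customer spend values with two obvious groups.
spend = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
centroids, clusters = kmeans_1d(spend, centroids=[0.0, 5.0])
```

No labels are supplied: the two groups emerge purely from the distances between points, which is the idea behind applications such as customer segmentation.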

Transition to Deep Learning

Deep learning, a subset of machine learning, utilizes neural networks with multiple layers to model complex patterns in large datasets. Frameworks such as TensorFlow and PyTorch have become instrumental in facilitating the development of sophisticated deep learning models. These tools provide researchers and practitioners with powerful abstractions and optimized computational graphs, enabling the creation of increasingly complex neural network architectures.
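To show why stacking layers matters, the sketch below runs a forward pass through a tiny two-layer network that computes XOR, a function no single linear layer can represent. The weights are hand-picked for illustration rather than trained, and frameworks such as TensorFlow and PyTorch would express the same computation with tensors.

```python
def relu(x):
    """Rectified linear unit: pass positive values, clamp negatives to zero."""
    return max(0.0, x)


def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: out_j = act(sum_i inputs_i * weights[j][i] + biases[j])."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(x * w for x, w in zip(inputs, w_row)) + b
        outputs.append(activation(z) if activation else z)
    return outputs


# Hand-picked (not trained) weights that make the network compute XOR.
HIDDEN_W, HIDDEN_B = [[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0]
OUTPUT_W, OUTPUT_B = [[1.0, -2.0]], [0.0]


def xor_net(x1, x2):
    hidden = dense([x1, x2], HIDDEN_W, HIDDEN_B, activation=relu)
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]
```

The hidden layer with its non-linear activation is what lets the output separate the inputs (0, 1) and (1, 0) from (0, 0) and (1, 1).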

The transition to deep learning has enabled AI systems to achieve unprecedented levels of accuracy and performance, making it a crucial component of modern AI technology. Organizations leveraging deep learning can tackle previously intractable problems, from real-time language translation to advanced anomaly detection in cybersecurity.

Robotics and Autonomous Systems

The integration of AI in robotics enhances operational efficiency and opens new possibilities in various sectors, including manufacturing, healthcare, and transportation. Robotics and autonomous systems rely heavily on real-time data processing and adaptive learning to navigate and interact with their environment effectively. This requires a seamless integration of various AI technologies, including computer vision, natural language processing, and reinforcement learning.

Natural Language Comprehension and Sentiment Analysis

Natural language comprehension and sentiment analysis have become critical components of the AI tech stack, enabling machines to understand and interact with human language in increasingly sophisticated ways. Natural language processing (NLP) techniques empower AI systems to comprehend, interpret, and generate human language, bridging the communication gap between humans and machines.

Sentiment analysis, a subset of NLP, involves identifying and categorizing emotions or opinions expressed in textual data. This capability has become invaluable for businesses seeking to understand customer feedback, monitor brand perception, and gauge public opinion on various topics.
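The simplest form of sentiment analysis counts positive and negative words. The sketch below uses a tiny, made-up lexicon purely for illustration; it ignores negation, sarcasm, and context, which is why production systems rely on much larger lexicons or trained language models.

```python
import re

# Tiny illustrative lexicon (hypothetical); real lexicons contain thousands of entries.
POSITIVE = {"great", "love", "excellent", "fast", "helpful"}
NEGATIVE = {"bad", "slow", "broken", "hate", "terrible"}


def sentiment(text):
    """Label text by comparing counts of positive and negative words."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```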

Libraries like spaCy and NLTK, together with pretrained language models such as BERT, have become staples in the NLP toolkit, offering powerful capabilities for tasks such as text classification, named entity recognition, and language translation. These technologies are essential for applications such as chatbots, customer feedback analysis, and social media monitoring, where understanding human language is crucial for delivering personalized and context-aware responses.

Visual Data Interpretation and Recognition

Visual data interpretation and recognition have emerged as key components of the AI tech stack, enabling systems to analyze and understand visual inputs such as images and videos with remarkable accuracy. Computer vision techniques are employed to extract meaningful information from visual data, facilitating tasks like object detection, image classification, and facial recognition. Technologies like convolutional neural networks (CNNs) and libraries such as OpenCV and TensorFlow are instrumental in developing these capabilities. Visual data interpretation is vital for applications in security, healthcare, autonomous vehicles, and more, where accurate and efficient analysis of visual information is essential.
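The core operation inside a convolutional layer is sliding a small kernel over an image and summing element-wise products. The sketch below applies a hand-written vertical-edge kernel to a made-up 4x4 image. In a CNN the kernel values are learned during training, and libraries such as OpenCV and TensorFlow provide optimized implementations.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2-D cross-correlation, the operation a CNN layer applies."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hypothetical 4x4 image: dark left half, bright right half.
image = [[0, 0, 1, 1] for _ in range(4)]
# Kernel that responds to a left-to-right increase in brightness.
vertical_edge = [[-1, 1], [-1, 1]]

feature_map = convolve2d(image, vertical_edge)
```

The resulting feature map is non-zero only in the middle column, exactly where the brightness changes.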

AI Tech Stack: Essential for AI Success

The AI technology stack is a critical framework for achieving success in artificial intelligence, combining various components that work together to deliver robust, scalable, and efficient AI solutions. Each element within the stack plays a pivotal role in ensuring that AI applications meet the high standards required by modern enterprises. From machine learning frameworks to cloud resources, every aspect contributes to the seamless operation and effectiveness of AI systems.

Machine Learning Frameworks

Machine learning frameworks are the backbone of AI model development, providing the necessary tools and libraries to build, train, and deploy machine learning models. Frameworks like TensorFlow, PyTorch, and Scikit-learn are indispensable for their ability to handle complex mathematical computations, streamline the development process, and offer extensive community support. With extensive experience in developing and deploying machine learning models, IT Svit ensures that each solution is optimized for performance and scalability, delivering precise and reliable results.

Programming Languages

Programming languages are fundamental to AI development, enabling the creation of algorithms, data processing scripts, and integration of AI models into applications. Python is the most popular language for AI due to its simplicity, readability, and extensive library support. Other languages like R, Java, and C++ are also used for specific tasks that require specialized performance or integration capabilities. IT Svit’s team of skilled developers utilizes these programming languages to write efficient, maintainable code that forms the core of AI solutions. Their expertise ensures that the right language is chosen for each task, optimizing the development process and enhancing the overall functionality of the AI system.

Cloud Resources

Cloud resources provide the necessary infrastructure for AI development and deployment, offering scalable, flexible, and cost-effective solutions. Platforms like AWS, Google Cloud, and Microsoft Azure offer a range of services, including virtual machines, GPUs, TPUs, and AI-specific tools that support the entire AI lifecycle. IT Svit, recognized as an AWS Select Partner, excels in leveraging cloud resources to build and deploy AI solutions. Their deep understanding of cloud infrastructure allows them to design and implement scalable AI systems that can handle large datasets and computationally intensive tasks, ensuring optimal performance and cost efficiency.

Data Manipulation Utilities

Data manipulation utilities are crucial for preparing and processing data, a fundamental step in the AI pipeline. Tools like Pandas, NumPy, and Apache Spark facilitate efficient data cleaning, transformation, and analysis, enabling the extraction of valuable insights from raw data. IT Svit’s expertise in data manipulation ensures that data is accurately prepared and ready for use in machine learning models. By utilizing these utilities, they enhance the quality and reliability of the data, which directly impacts the performance of the AI models. Their meticulous approach to data preparation ensures that AI solutions are built on a solid foundation of high-quality data.

Stages of the Modern AI Tech Stack

The modern AI tech stack is a structured framework designed to facilitate the development, deployment, and operation of AI systems. It consists of multiple stages, each encompassing specific tasks and components critical to building effective AI solutions. These stages ensure that AI applications are developed systematically, with each layer contributing to the overall performance and scalability of the system. Here are the key stages of the modern AI tech stack:

Data Collection and Ingestion

The first stage involves gathering raw data from various sources such as databases, APIs, sensors, and user interactions. This data can be structured, semi-structured, or unstructured and is often stored in data lakes or cloud storage solutions. Efficient data collection and ingestion are crucial for providing the foundation upon which AI models are built. Tools like Apache Kafka and Apache Flume, and cloud-based data ingestion services like AWS Glue or Google Cloud Dataflow, are commonly used at this stage. These tools support real-time data streaming, batch processing, and integration with various data sources, ensuring that the data is captured accurately and efficiently.
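The difference between streaming and batch ingestion can be sketched with a generator that groups an incoming stream into fixed-size batches. The sensor records are hypothetical, and the function is a stand-in for what dedicated ingestion tools do at much larger scale.

```python
from itertools import islice


def batched(records, batch_size):
    """Yield lists of up to batch_size records from any iterable, without loading it all."""
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Hypothetical sensor readings arriving as a stream.
stream = ({"sensor": "s1", "reading": i} for i in range(5))
batches = list(batched(stream, batch_size=2))
```

Because the function pulls records lazily, it works equally well on a finite file and on a source that keeps producing data.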

Data Storage and Management

Once data is collected, it must be stored and managed effectively to ensure accessibility, security, and scalability. This stage involves implementing robust databases, data warehouses, and data lakes capable of handling large volumes of diverse data types. Organizations often leverage a combination of SQL databases (e.g., MySQL, PostgreSQL), NoSQL databases (e.g., MongoDB, Cassandra), and cloud storage solutions (e.g., Amazon S3, Google Cloud Storage) to store and organize data for subsequent processing.

For analytical querying and reporting, data warehouses like Amazon Redshift and Google BigQuery have become indispensable tools in the AI tech stack. Proper data storage and management ensure that data is readily available for analysis and model training while maintaining data integrity and security.

Data Preprocessing and Cleaning

Data preprocessing and cleaning are essential steps to ensure the quality and consistency of the data. This stage involves tasks such as removing duplicates, handling missing values, normalizing data, and transforming data into a suitable format for analysis. Tools and libraries like Pandas, NumPy, and Apache Spark are used to preprocess and clean the data, preparing it for feature engineering and model training. Data cleaning ensures that the dataset is free from errors and inconsistencies, while preprocessing transforms the data into a structured format that machine learning algorithms can easily interpret.
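Removing duplicates usually requires normalizing values first, because records that look different may refer to the same thing. The sketch below assumes hypothetical customer rows keyed by an `email` field; the same pattern is available in Pandas and Spark through their built-in deduplication methods.

```python
def clean_rows(rows):
    """Normalize the email field, then keep only the first row seen for each email."""
    cleaned, seen = [], set()
    for row in rows:
        email = row["email"].strip().lower()
        if email in seen:
            continue
        seen.add(email)
        cleaned.append({**row, "email": email})
    return cleaned


# Hypothetical raw rows: the first two differ only in case and whitespace.
raw = [
    {"email": "Ana@Example.com ", "plan": "pro"},
    {"email": "ana@example.com", "plan": "pro"},
    {"email": "li@example.com", "plan": "free"},
]
rows = clean_rows(raw)
```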

Feature Engineering and Selection

Feature engineering and selection form a crucial stage in the AI development process, involving the creation of new features or the selection of existing ones that are most relevant for the AI model. This phase is critical for improving model accuracy and performance, as it directly influences the quality of insights that can be extracted from the data.

Data scientists employ various techniques to engineer features, including scaling, encoding categorical variables, and creating interaction features. Tools like Scikit-learn and Featuretools assist in this process, providing powerful capabilities for automated feature engineering and selection. By carefully crafting and selecting features, organizations can enhance their models’ ability to learn from data, reduce overfitting, and improve generalization to new, unseen data.
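Two of the techniques mentioned above, scaling and interaction features, can be sketched with the standard library. The `width` and `height` columns are hypothetical; Scikit-learn offers equivalent transformers for real pipelines.

```python
from statistics import mean, pstdev


def standardize(values):
    """Scale to zero mean and unit variance (z-scores), using the population std."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def interaction(a, b):
    """Element-wise product of two feature columns."""
    return [x * y for x, y in zip(a, b)]


# Hypothetical feature columns.
width = [2.0, 4.0, 6.0]
height = [1.0, 3.0, 5.0]

area = interaction(width, height)   # new feature: width * height
width_scaled = standardize(width)   # same information, comparable scale
```

An interaction feature such as `area` lets even a linear model capture an effect that depends on two inputs jointly.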

The subsequent stages of model development, evaluation, deployment, and maintenance build upon these foundational steps, culminating in the creation of robust, effective AI systems. Each stage in the modern AI tech stack plays a vital role in ensuring that AI applications meet the high standards required in today’s competitive landscape.

Model Development and Training

This phase involves building and training machine learning models using a variety of algorithms and frameworks tailored to specific business needs and data characteristics. Data scientists and machine learning engineers employ popular frameworks such as TensorFlow, PyTorch, and Scikit-learn to develop and train sophisticated models.

The process begins with choosing appropriate algorithms based on the problem at hand, whether it’s classification, regression, clustering, or more complex tasks. Hyperparameter tuning is a critical aspect of this stage: hyperparameters are settings chosen before training, such as the learning rate or tree depth, as opposed to the parameters a model learns from data. Techniques like grid search, scored with cross-validation, are employed to find a well-performing configuration.
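Grid search combined with cross-validation can be sketched end to end on a toy problem. The example below tunes `k` for a one-dimensional k-nearest-neighbors classifier using leave-one-out cross-validation. The data set and the candidate values are hypothetical, and Scikit-learn provides general-purpose versions of both techniques.

```python
def knn_predict(train, x, k):
    """Majority vote among the k training points nearest to x (1-D features, labels 0/1)."""
    neighbors = sorted(train, key=lambda item: abs(item[0] - x))[:k]
    votes = sum(label for _, label in neighbors)
    return 1 if votes * 2 > k else 0


def loo_accuracy(data, k):
    """Leave-one-out cross-validation: predict each point from all the others."""
    correct = 0
    for i, (x, label) in enumerate(data):
        train = data[:i] + data[i + 1:]
        correct += knn_predict(train, x, k) == label
    return correct / len(data)


def grid_search(data, candidate_ks):
    """Score every candidate k and return the best one along with all scores."""
    scores = {k: loo_accuracy(data, k) for k in candidate_ks}
    best_k = max(scores, key=scores.get)
    return best_k, scores


# Hypothetical data: class 0 clustered near 1, class 1 clustered near 11.
data = [(0.0, 0), (1.0, 0), (2.0, 0), (10.0, 1), (11.0, 1), (12.0, 1)]
best_k, scores = grid_search(data, candidate_ks=[1, 3, 5])
```

On this data, `k=1` and `k=3` classify every held-out point correctly, while `k=5` fails on all of them because each vote is dominated by the other class. Catching that kind of sensitivity is the purpose of tuning.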

Model evaluation metrics such as accuracy, precision, recall, and F1-score guide the iterative improvement process. This stage also involves splitting the dataset into training and testing sets, ensuring that the model is evaluated on unseen data to assess its real-world performance accurately. The goal is to create models that not only perform well on training data but also generalize effectively to new, unseen data.
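Holding out a test set is straightforward to sketch. The function below is an illustrative stand-in (the name `split_train_test` and its defaults are assumptions, not a library API); it shuffles with a fixed seed so the split is reproducible.

```python
import random


def split_train_test(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of rows with a fixed seed, then split off the last test_fraction."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[:-n_test], shuffled[-n_test:]


train, test = split_train_test(range(8))
```

Fixing the seed matters in practice: without it, two runs would evaluate on different rows and their metrics would not be comparable.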

Model Evaluation and Validation

After training, models must be evaluated and validated to ensure they perform well on unseen data. This stage involves splitting the data into training and validation sets, using cross-validation techniques, and assessing the model’s performance. Tools and libraries like Scikit-learn and Keras provide functionalities for model evaluation and validation. Metrics such as ROC-AUC, confusion matrix, and precision-recall curves are used to understand the model’s behavior and identify areas for improvement. Proper evaluation and validation ensure that the model is robust and can generalize well to new data, minimizing the risk of overfitting.
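The metrics above all derive from the four cells of the confusion matrix. Here is a minimal sketch for binary labels, using invented predictions; Scikit-learn exposes the same calculations in its metrics module.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    return tp, fp, fn, tn


def precision_recall_f1(y_true, y_pred):
    """Precision = tp/(tp+fp), recall = tp/(tp+fn), F1 = their harmonic mean."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical ground truth and model predictions.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]

counts = confusion_counts(y_true, y_pred)
metrics = precision_recall_f1(y_true, y_pred)
```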

Model Deployment

Once a model is thoroughly trained and validated, it needs to be deployed into a production environment where it can process real-time data and generate valuable insights or predictions. This stage involves integrating the model with the application layer, setting up APIs for model inference, and ensuring the model can scale to handle user requests efficiently.

Tools like TensorFlow Serving, Amazon SageMaker, and Docker have become integral to this stage. TensorFlow Serving and SageMaker provide model serving and scaling, while Docker packages a model and its dependencies into a container that runs consistently across environments. Together they enable seamless integration of machine learning models into existing IT infrastructure, ensuring high availability and performance.
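At its core, deployment means turning a trained model into an artifact that a separate serving process can load. The sketch below shows that hand-off for a linear model stored as JSON. The file name, the parameter layout, and the `version` field are all hypothetical, and real serving tools define their own artifact formats.

```python
import json
import os
import tempfile


def save_model(params, path):
    """Write model parameters to a JSON artifact."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(params, f)


def load_model(path):
    """Read model parameters back, as a serving process would at startup."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def predict(params, x):
    """Inference for a linear model: slope * x + intercept."""
    return params["slope"] * x + params["intercept"]


# Hypothetical trained parameters and artifact location.
artifact = os.path.join(tempfile.mkdtemp(), "model-v1.json")
save_model({"version": 1, "slope": 2.0, "intercept": 1.0}, artifact)
served = load_model(artifact)
```

Storing a version alongside the parameters makes it possible to tell which model produced a given prediction once several versions are in production.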

Monitoring and Maintenance

Model monitoring and maintenance are ongoing tasks that ensure the AI system continues to perform optimally after deployment. This stage involves tracking model performance, detecting anomalies, and retraining models as necessary to adapt to new data or changing conditions.

Advanced monitoring tools like Prometheus and Grafana, along with custom monitoring solutions, are employed to keep a vigilant eye on the AI system’s performance. Key metrics such as prediction accuracy, latency, and throughput are constantly monitored to identify any degradation in performance or potential issues.
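A rolling-window check is one simple way to detect the kind of degradation described above. The sketch below tracks latency, but the same pattern applies to accuracy or throughput. The class name, window size, and 100 ms budget are assumptions for illustration; tools like Prometheus express such rules as alerting queries.

```python
from collections import deque


class RollingMonitor:
    """Track the mean of the most recent observations and flag threshold breaches."""

    def __init__(self, window, max_mean):
        self.values = deque(maxlen=window)  # old observations fall off automatically
        self.max_mean = max_mean

    def record(self, value):
        """Add an observation; return True if the rolling mean now exceeds max_mean."""
        self.values.append(value)
        return sum(self.values) / len(self.values) > self.max_mean


# Hypothetical latency budget of 100 ms, averaged over the last 3 requests.
latency = RollingMonitor(window=3, max_mean=100.0)
alerts = [latency.record(ms) for ms in [80, 90, 95, 130, 140]]
```

Averaging over a window rather than alerting on single values avoids paging someone for one slow request while still catching a sustained slowdown.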

Continuous Integration and Continuous Deployment (CI/CD)

The final stage involves implementing robust CI/CD practices to automate the development, testing, and deployment of AI models. This approach ensures that updates and improvements to the AI system are deployed efficiently and reliably, minimizing downtime and reducing the risk of errors associated with manual deployments.

CI/CD tools such as Jenkins, GitLab CI, and CircleCI are commonly used to create automated pipelines that handle code integration, model testing, and deployment to production environments. These pipelines typically include automated testing frameworks that ensure new code changes or model updates do not introduce errors or degrade performance.
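The automated check that a model update does not degrade performance can be as simple as comparing candidate metrics to a baseline. The function below is a sketch of such a quality gate; the metric names and the 0.01 tolerance are hypothetical, and a pipeline step in Jenkins, GitLab CI, or CircleCI would fail the build when it returns `False`.

```python
def passes_quality_gate(baseline, candidate, max_drop=0.01):
    """Return (ok, failures); a metric fails if it is missing or drops by more than max_drop."""
    failures = []
    for name, base_value in baseline.items():
        new_value = candidate.get(name)
        if new_value is None or base_value - new_value > max_drop:
            failures.append(name)
    return not failures, failures


# Hypothetical metrics for the production model and two candidate models.
baseline = {"accuracy": 0.91, "f1": 0.88}
good_candidate = {"accuracy": 0.92, "f1": 0.875}
bad_candidate = {"accuracy": 0.85, "f1": 0.88}

good_result = passes_quality_gate(baseline, good_candidate)
bad_result = passes_quality_gate(baseline, bad_candidate)
```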

IT Svit: Your Reliable AI Development Partner

Choosing the right AI development partner is crucial. With over 18 years of experience and a team of 200+ certified professionals, IT Svit is a reliable choice for businesses seeking to leverage AI technologies.

Why choose IT Svit?

- Extensive Expertise: IT Svit excels in AI technologies like TensorFlow, PyTorch, Python, and R, handling projects from predictive models to deep learning applications.

- Comprehensive Services: Offering end-to-end AI services, IT Svit specializes in custom solutions, ensuring actionable insights and tangible results.

- Scalable Infrastructure: As an AWS Select Partner, IT Svit uses cloud technologies to provide scalable AI solutions, efficiently managing large datasets and complex computations.

- Proven Success: With a strong portfolio of successful projects across various industries, IT Svit consistently delivers high-quality results on time and within budget.

- Customized Solutions: Tailoring AI models to client needs, IT Svit ensures seamless integration with existing systems for maximum impact and ROI.

- Security and Compliance: Prioritizing security and compliance, IT Svit ensures all AI solutions meet industry standards and regulations, protecting your data.

Ready to transform your business with AI? Contact IT Svit today to discuss your project and discover how our expertise can drive your success. Let’s innovate together!